Campus Gateway Deployment Guide

Introduction

The Cisco Campus Gateway (CG) is the cloud-native solution from Cisco for centralized wireless deployments. As with a wireless LAN controller (WLC), data plane traffic is tunneled from the APs to the CG, where it is terminated and switched to the rest of the network. Because traffic is terminated centrally, client VLANs only have to exist in the core, which allows seamless roaming across the layer 3 (L3) domains of the APs.

Why is a centralized deployment needed?

Traditionally, Cisco cloud-managed wireless deployments are distributed deployments with each AP locally switching client traffic via a layer 2 (L2) trunk port to the access switch. Because of this, the client VLANs are required to span across all the access switches where a seamless roam is required. Otherwise, the client would need to get a new IP address, resulting in a broken session. This method of deployment is completely sufficient for small to medium sized deployments, such as branch offices or buildings, where the same VLAN can be shared and spanned across all the switches in the network to create an L2 roaming domain.

However, as cloud-managed wireless deployments grow in size and reach medium to large campuses (for example, university campuses), the limits of a distributed wireless design are reached. Spanning the L2 VLANs across all the access switches creates a large broadcast domain, and the number of wireless clients begins to overwhelm the ARP and MAC address tables of the access switches. Every wireless client needs an entry in the tables of every access switch on which the L2 VLAN is defined. When a client roams between APs, each switch needs to update its tables, which is a CPU-intensive task. A large L2 network also brings spanning tree implications and BUM (broadcast, unknown unicast, and multicast) traffic to deal with. Thus, when thousands of clients are roaming, CPU utilization on every switch rises sharply, and enough of this load may cause a switch to fail outright. A centralized deployment was needed to minimize these problems.

Centralizing the wireless traffic at Campus Gateway allows the client VLANs to exist only on the connection between the CG and the upstream switch. This is typically the core/distribution switch, which has much greater scale and capacity than the access switches. Limiting the client VLANs to the upstream switch has the added benefit of containing the broadcast domain for these VLANs to a much smaller area.

Additionally, Campus Gateway deployments leverage an overlay design. Rather than redesigning the underlay switching network to account for wireless traffic being switched via L2 trunks at the AP level, traffic is tunneled from the AP to the CG. As long as the APs and CG have IP connectivity, the control plane and data plane tunnels will be able to form, meaning very few changes are needed on the wired side (for more information on the tunnels, see the How do the devices communicate with each other and Dashboard? section). Moving from a traditional customer-managed Catalyst wireless deployment should be as simple as migrating to Cloud-Managed APs and replacing the WLC with CG.

How is Campus Gateway different from MX?

Although MX is still an option in Dashboard as a wireless concentrator to tunnel wireless client traffic to a centralized box, it is not a recommended deployment for a Large Campus deployment. MX as a concentrator has various scale limitations and has not been tested for large wireless deployments. Campus Gateway uses a different architecture, with different scalability and tunneling technologies that are all implemented in hardware, making it well suited to Large Campus requirements. Additionally, CG provides unique features such as a centralized client database for seamless roaming, RADIUS proxy, and mDNS gateway, which are not available in MX.

Architecture of a Campus Gateway Deployment

While Campus Gateway does serve the same purpose in terms of client traffic aggregation as WLC and shares the same hardware as the CW9800H1 WLC, this is not a cloud-managed WLC. Rather it is a purpose-built appliance for data plane aggregation and real time control plane services for wireless traffic. Campus Gateway follows the idea of “Decentralize when you can, centralize when you must”.

To meet customers' large-scale roaming needs, certain aspects of the wireless network need to be brought back to a centralized on-premises box. Functions such as data plane aggregation, RADIUS proxy, and the mobility database need to exist on the CG. Control plane and management plane tasks that do not need to be centralized continue to be served by Dashboard and the APs. Management plane tasks, like configuration management and software management, and non-real time control plane tasks, like AutoRF, Air Marshal, and Rogues, do not need to be done by the CG. They can be kept in the cloud.

The following image illustrates the breakdown of these functions.

How do the devices communicate with each other and Dashboard?

Management Plane

Like existing cloud-managed devices, Campus Gateway and APs will each be managed directly and natively by Dashboard. This is the same as the MR, MS, MX, and other cloud-managed Cisco devices today. Each device will have its own Meraki Tunnel connection to the cloud. The CG does not serve any management function as it is an overlay L3 tunnel solution for bridging client traffic into the network core.

Control Plane

The control plane (CP) tunnel between Campus Gateway and APs will leverage the QUIC protocol. The CP tunnel will be used to carry messages like AP registration, client anchoring, etc. Also, it will be utilized to carry packets for high availability messages and inter-CG traffic.

The QUIC protocol is a UDP-based transport protocol developed to be as reliable as TCP without suffering from the overhead associated with TCP. TCP suffers from head-of-line blocking where the messages sent are a single byte stream, so if one large packet needs to be retried, all subsequent packets in the stream are blocked until the packet is successfully sent. For QUIC, traffic is sent in multiple streams. If one packet is lost, only the stream which the packet is a part of is affected while the rest will continue to operate as normal.

The QUIC tunnels are secured via TLS encryption with Dashboard-provisioned keys or certificates. Each AP will establish a QUIC tunnel to each CG in the cluster with regular keepalives being sent to check the status of the Primary and Backup CG in the cluster.

The QUIC tunnel will operate on UDP port 16674 on both Campus Gateway and AP.

Data Plane

The data plane (DP) tunnel between Campus Gateway and APs will leverage the Virtual eXtensible Local Area Network (VXLAN) protocol. These will be stateless and transient tunnels, relying on per-packet encapsulation. The encapsulation used will be the standard VXLAN encapsulation. The tunnel will be used to carry client data packets which are then bridged onto the client VLANs at the CG. The tunnel header will carry the QoS and policy information (e.g., DSCP, SGT, VRF) while RADIUS messages will also be carried inside the tunnel.

Two flows between the AP and CG may take vastly different network paths, which makes it harder to identify which connections belong to which AP. To address this, a monitoring check is implemented on the VXLAN connection. This uses the Bidirectional Forwarding Detection (BFD) mechanism for VXLAN (for more information, see RFC 8971).

The VXLAN tunnel will operate on UDP port 16675 on both Campus Gateway and AP. If the VXLAN port needs to be changed, please contact Meraki support.

Path MTU Discovery

The Path MTU calculation between the APs and Campus Gateway is done via the QUIC tunnel, and it will be calculated for both the QUIC and VXLAN tunnels. For the VXLAN tunnel, the do not fragment (DF) bit is always set in the Outer IP header.

Before adding the VXLAN encapsulation over the client traffic, both the AP and Campus Gateway will fragment the inner IP packet (client traffic) if the total packet size, including the VXLAN encapsulation, exceeds the Path MTU. In the event the inner IPv4 packet has the DF bit set, and the total packet size is too big, the AP/Campus Gateway will send an ICMP Too Big message back to the sender.
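The decision described above can be sketched in a few lines of Python. This is only an illustration of the stated rules, not the AP or Campus Gateway implementation; the function name, the returned strings, and the default overhead value (taken from the 50-byte VXLAN overhead used elsewhere in this guide) are assumptions made for the example.

```python
# Illustrative sketch only: names and return values are hypothetical.
VXLAN_OVERHEAD_BYTES = 50  # outer IP + UDP + VXLAN + inner MAC, per this guide


def tunnel_action(inner_packet_len: int, df_bit_set: bool, path_mtu: int,
                  overhead: int = VXLAN_OVERHEAD_BYTES) -> str:
    """Return what happens to a client IPv4 packet before VXLAN encapsulation."""
    if inner_packet_len + overhead <= path_mtu:
        # The encapsulated packet fits within the Path MTU.
        return "encapsulate"
    if df_bit_set:
        # Inner packet must not be fragmented: signal the sender instead.
        return "send-icmp-too-big"
    # Fragment the inner packet first, then encapsulate each fragment.
    return "fragment-then-encapsulate"


print(tunnel_action(1400, False, 1500))
print(tunnel_action(1480, True, 1500))
print(tunnel_action(1480, False, 1500))
```

Because the outer header always carries the DF bit, fragmentation is applied to the inner packet before encapsulation rather than to the tunnel packet itself.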

How big of a packet can be sent?

Let's assume the Path MTU calculated is 1500 bytes. The overhead from the VXLAN tunnel is 50 bytes:

- Outer IP: 20 bytes

- UDP: 8 bytes

- VXLAN: 8 bytes

- MAC: 14 bytes

20 bytes + 8 bytes + 8 bytes + 14 bytes = 50 bytes

Thus, the largest IP packet that can be transmitted without fragmentation would be 1450 bytes. If TCP traffic is sent, the AP will implement TCP MSS (Maximum Segment Size) adjust to avoid fragmentation. This means that the TCP MSS would be adjusted to:

- IPv4 Traffic: 1410 bytes (40 bytes less)

- IPv6 Traffic: 1390 bytes (60 bytes less)
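The arithmetic above can be reproduced with a short Python sketch. The overhead values come from this section; the 20-byte TCP header and the 20-byte IPv4 / 40-byte IPv6 base header sizes are standard values without options or extension headers, and the function names are made up for the example.

```python
# Sketch of the Path MTU arithmetic; function names are hypothetical.
VXLAN_OVERHEAD = {"outer_ip": 20, "udp": 8, "vxlan": 8, "inner_mac": 14}
TCP_HEADER = 20                            # bytes, no TCP options assumed
INNER_IP_HEADER = {"ipv4": 20, "ipv6": 40}  # bytes, no options/extensions


def max_inner_packet(path_mtu: int) -> int:
    """Largest client IP packet that fits without fragmentation."""
    return path_mtu - sum(VXLAN_OVERHEAD.values())


def adjusted_mss(path_mtu: int, ip_version: str) -> int:
    """TCP MSS that keeps the encapsulated packet within the Path MTU."""
    return max_inner_packet(path_mtu) - INNER_IP_HEADER[ip_version] - TCP_HEADER


print(max_inner_packet(1500))        # 1450
print(adjusted_mss(1500, "ipv4"))    # 1410
print(adjusted_mss(1500, "ipv6"))    # 1390
```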

Note: Jumbo frames on APs are not supported currently.

Overview of Campus Gateway

Scale and Throughput

| Scale (Standalone or Cluster) | |
|---|---|
| Access Points | 5000 |
| Clients | 50,000 |

| Throughput | |
|---|---|
| Standalone | Up to 100 Gbps |
| Cluster of Two | Up to 200 Gbps |

Access Point Software and Support

Cloud-managed Access Points are required to run MR31.2.X code or newer.

| Wi-Fi Generation | Models |
|---|---|
| Wi-Fi 7 (1) | |
| Wi-Fi 6E | |
| Wi-Fi 6 | |

1 Wi-Fi 7 APs will only be supported in 802.11be operation starting with MR 32.1.X and later.

2 CW9172H / MR36H wired ports will not be supported for tunneling at first release.

3 APs in repeater mode do not currently support tunneling to Campus Gateways.

License Requirements

Campus Gateway will not require a dedicated license, but it is required for the APs to have an Enterprise/Essentials or Advanced/Advantage tier license. Organizations are required to be using either co-termination Meraki subscription, or Cisco Networking subscription licensing.

Front View

| No. | Component |
|---|---|
| 1 | Power LED |
| 2 | System LED |
| 3 | Alarm LED |
| 4 | Dashboard Status LED |
| 5 | M.2 SSD |
| 6 | RJ-45 compatible console port (Not available for use on Campus Gateway) |
| 7 | USB Port 0 (Not available for use on Campus Gateway) |
| 8 | USB Port 1 (Not available for use on Campus Gateway) |
| 9 | SP: RJ-45 1 GE management port |
| 10 | RP: 1/10-GE SFP+ port |
| 11 | TwentyFiveGigE0/2/0: 25-GE SFP28 EPA2 Port 0 |
| 12 | TwentyFiveGigE0/1/0: 25-GE SFP28 EPA1 Port 0 |
| 13 | TwentyFiveGigE0/1/1: 25-GE SFP28 EPA1 Port 1 |
| 14 | TwentyFiveGigE0/1/2: 25-GE SFP28 EPA1 Port 2 |
| 15 | Te0/0/0: 1-GE SFP / 10-GE SFP+ Port 0 |
| 16 | Te0/0/2: 1-GE SFP / 10-GE SFP+ Port 2 |
| 17 | Te0/0/4: 1-GE SFP / 10-GE SFP+ Port 4 |
| 18 | Te0/0/6: 1-GE SFP / 10-GE SFP+ Port 6 |
| 19 | Carrier Label Tray |
| 20 | Te0/0/7: 1-GE SFP / 10-GE SFP+ Port 7 |
| 21 | Te0/0/5: 1-GE SFP / 10-GE SFP+ Port 5 |
| 22 | Te0/0/3: 1-GE SFP / 10-GE SFP+ Port 3 |
| 23 | Te0/0/1: 1-GE SFP / 10-GE SFP+ Port 1 |
| 24 | RP: RJ-45 1 GE redundancy port |
| 25 | CON: 5-pin Micro-B USB console port (Not available for use on Campus Gateway) |

LED Indicators

| | System Status | Power LED | Dashboard Status LED | System LED | Alarm LED |
|---|---|---|---|---|---|
| 1 | CG is powered off or not receiving power. | Off | Off | Off | Off |
| 2 | CG is powered on and is loading the bootstrap firmware. | Solid Green | No change in LED state | No change in LED state | Solid Amber: ROMMON boot |
| 3 | ROMMON boot complete | No change in LED state | No change in LED state | No change in LED state | Solid Green |
| 4 | CG is loading the operating system. | No change in LED state | No change in LED state | Slow Blinking Green | Solid Amber: SYSTEM bootup |
| 5 | Boot up fails; device in ROMMON | No change in LED state | No change in LED state | Solid Amber: Crash; Slow Blinking Amber: Secure boot failure | Slow Blinking Amber: Temperature Error & Secure boot failure |
| 6 | System error: CG failed to load operating system or bootstrap firmware. | No change in LED state | No change in LED state | Solid Amber: Crash; Slow Blinking Amber: Secure boot failure | Slow Blinking Amber: Temperature Error & Secure boot failure |
| 7 | Setting up local management and cloud-connectivity requirements. Note: If the CG has not been assigned a static management IP previously, it will try to obtain an IP via DHCP in this stage. | No change in LED state | Slow Blinking Amber | No change in LED state | No change in LED state |
| 8 | System error: CG failed to complete local provisioning. | No change in LED state | Solid Amber | No change in LED state | No change in LED state |
| 9 | CG is completely provisioned but unable to connect to Meraki cloud | No change in LED state | Solid Amber | No change in LED state | No change in LED state |
| 10 | Firmware download / upgrade in progress | No change in LED state | Blinking Green: System upgrade in progress | No change in LED state | No change in LED state |
| 12 | CG is fully operational and connected to the Meraki cloud | Solid Green | Solid Green | No change in LED state | No change in LED state |
| 13 | Operating System boot complete | No change in LED state | No change in LED state | Solid Green | No change in LED state |
| 14 | Factory Reset via the Local Status Page | No change in LED state | No change in LED state | Slow Blinking Red | No change in LED state |
| 15 | Blink LED Feature | No change in LED state | Fast Blinking Red | Fast Blinking Red | Fast Blinking Red |

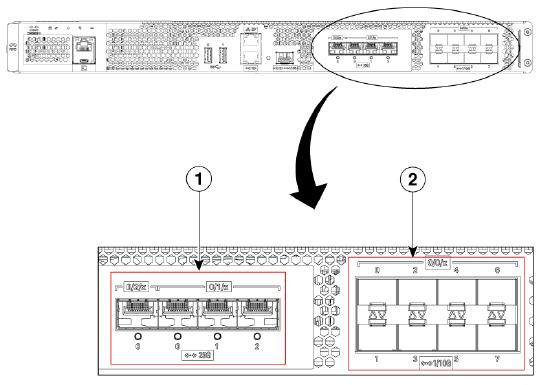

Built-In SFP and SFP+ Ports

| No. | Ports |
|---|---|
| 1 | Bay 1: 3 x 25-GE SFP28 ports. Bay 2: 1 x 25-GE SFP port. |
| 2 | Bay 0: 8 x 1-GE/10-GE SFP+ ports. |

Supported SFP Modules

Data Port SFP Modules

| Type | Modules supported |
|---|---|
| Small Form-Factor Pluggable (SFP) | GLC-LH-SMD |
| | GLC-SX-MMD |
| | GLC-TE |
| | GLC-ZX-SMD |
| | GLC-BX-U |
| | GLC-BX-D |
| | GLC-EX-SMD |
| Enhanced SFP (SFP+ / SFP28) | SFP-10G-SR |
| | SFP-10G-SR-S |
| | SFP-10G-LR |
| | SFP-10G-LR-X |
| | SFP-10G-ER |
| | SFP-H10GB-ACU10M |
| | SFP-H10GB-CU5M |
| | SFP-10G-AOC10M |
| | Finisar-LR (FTLX1471D3BCL) |
| | Finisar-SR (FTLX8574D3BC) |
| | SFP-H10GB-CU1M |
| | SFP-H10GB-CU1-5M |
| | SFP-H10GB-CU2M |
| | SFP-H10GB-CU2-5M |
| | SFP-H10GB-CU3M |
| | SFP-H10GB-ACU7M |
| | SFP-10G-AOC1M |
| | SFP-10G-AOC2M |
| | SFP-10G-AOC3M |
| | SFP-10G-AOC5M |
| | SFP-10G-AOC7M |
| | SFP-10/25G-CSR-S (Only supported in the 25-GE ports) |
| | SFP-10/25G-LR-S (Only supported in the 25-GE ports) |
| | SFP-25G-SR-S |
| | SFP-25G-AOC2M |
| | SFP-25G-AOC10M |
| | SFP-25G-AOC5M |
| | SFP-H25G-CU1M |
| | SFP-H25G-CU5M |
| | SFP-25G-AOC3M |
| | SFP-25G-AOC7M |
| | SFP-25G-AOC1M |

Supported Redundancy Port SFP Modules

| Type | Modules supported |
|---|---|
| SFP/SFP+ Modules | GLC-LH-SMD |
| | GLC-SX-MMD |
| | SFP-10G-SR |
| | SFP-10G-SR-S |
| | SFP-10G-LR |
| | SFP-10G-LR-S |

Deploying Campus Gateway

In order to deploy Campus Gateway and tunnel SSIDs to it, the network is required to be upgraded to MR31.2.X / MCG31.2.X code or later. This will ensure that all APs are upgraded to MR31.2 or later.

Please note that the firmware option for MR31.2.X / MCG31.2.X will not appear until the CG is added to the network. The upgrade should be done after adding the CG to the network but before configuring the SSIDs to tunnel to CG.

Note: APs in the same Meraki Network that cannot be upgraded to MR 31.2.X or later (for example Wi-Fi 5 APs and earlier) will stay on the latest supported code. These APs will not be able to tunnel SSIDs to Campus Gateway.

Note: At launch, APs and Campus Gateway should be part of the same geographical location with an RTT of less than 20 ms.

Check and Configure Upstream Firewall Settings

If a firewall is in place, it must allow outgoing connections on particular ports to particular IP addresses. The most current list of outbound ports and IP addresses for your particular organization can be found under Help → Firewall info. The help button is located on the top right corner of any dashboard page. For more information refer to Upstream Firewall Rules for Cloud Connectivity.

Additionally, if there is a firewall in place between Campus Gateway and APs, please ensure that UDP port 16674 for QUIC and UDP port 16675 for VXLAN are open.
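As a quick planning aid, the two AP-to-CG tunnel ports can be checked against a list of permitted flows. The sketch below is a hypothetical helper, not a Meraki tool: the `(protocol, port)` tuple format for allowed rules is an assumption, and only the port numbers and their purposes come from this guide.

```python
# Hypothetical pre-deployment check; the rule format is an assumption.
REQUIRED_AP_TO_CG = {
    ("udp", 16674): "QUIC control plane",
    ("udp", 16675): "VXLAN data plane",
}


def missing_tunnel_ports(allowed_rules):
    """Return the required AP-to-CG ports that the firewall does not allow."""
    allowed = set(allowed_rules)
    return [
        f"{proto.upper()}/{port} ({purpose})"
        for (proto, port), purpose in REQUIRED_AP_TO_CG.items()
        if (proto, port) not in allowed
    ]


# Example: a firewall that only permits the QUIC port.
print(missing_tunnel_ports([("udp", 16674)]))
```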

Note: If the VXLAN port needs to be changed, please contact Meraki support.

Onboarding the CG

Upstream Switch Connection

The Campus Gateway is a one-armed concentrator with a single logical link to the upstream network. This link will consist of multiple physical links operating in a single port-channel with LACP, and it will operate as a Layer 2 trunk. All the traffic (management, control, and data) will use this link and is separated using VLANs.

It is always recommended to connect at least two physical ports on Campus Gateway to provide link redundancy. Even if only one port is connected, it is mandatory to configure the upstream switch with a one-port EtherChannel to allow LACP negotiation.

Recommended Upstream Switches

Full Scale

In order to take advantage of the full scale of the Campus Gateway, the upstream switch must have MAC and ARP tables that scale higher than the 50K client scale on the Campus Gateway. The Cisco recommendations along with their scale are shown below.

| Switch Family | Model | MAC Table Scale | ARP Table Scale | Meraki Cloud Monitoring Support |
|---|---|---|---|---|
| Cisco Catalyst 9600 | C9600X-SUP-2 (S1 Q200 based) | 256K | 128K | |
| | C9600-SUP-1 (UADP 3.0 based) | 128K | 90K | |
| Cisco Catalyst 9500 | UADP 3.0 based | 82K | 90K | x |
| | UADP 2.0 based | 64K | 80K | x |
| Cisco Catalyst 9500X | | 256K | 256K | x |
| Cisco Nexus 7000/7700 | M3 Line Cards | 384K | | |
| | F3 Line Cards | 64K | | |
| Cisco Nexus 9500 | | 90K | 90K (60 v4 / 30 v6) | |
| Cisco Nexus 9300 | 9300 Platforms | 90K | 65K (40K v4 / 25K v6) | |
| | 9300-FX/X/FX2 | 92K | 80K (48K v4 / 32K v6) | |
| Cisco Nexus 9200 | | 96K | 64K (32K v4 / 32K v6) | |

Partial Scale

If only a partial scale is needed, a lower-capacity switch can be used. However, as the scale increases, the limits of the MAC and ARP tables for those switches will be reached.

The Cisco Catalyst 9300 and 9300-M models support up to 35000 MAC addresses, which is not sufficient for a full-scale Campus Gateway.
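The sizing rule in this section (MAC and ARP tables must scale higher than the client count) can be expressed as a simple comparison. This is a rough sketch with hypothetical function names; it treats "K" as 1,000 entries, which is an assumption, and it ignores any other entries the switch must hold.

```python
# Rough sizing sketch; names are hypothetical and "K" is read as 1,000.
CG_MAX_CLIENTS = 50_000  # full client scale of a Campus Gateway cluster


def table_headroom(table_size: int, clients: int = CG_MAX_CLIENTS) -> int:
    """Entries left over (negative means the table is too small)."""
    return table_size - clients


def supports_full_scale(mac_table: int, arp_table: int,
                        clients: int = CG_MAX_CLIENTS) -> bool:
    """True when both tables scale higher than the client count."""
    return mac_table > clients and arp_table > clients


# C9600X-SUP-2 figures from the Full Scale table: 256K MAC, 128K ARP.
print(supports_full_scale(256_000, 128_000))
# Catalyst 9300 MAC table (35,000 entries) against 50,000 clients.
print(table_headroom(35_000))
```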

Recommended Switch Topology

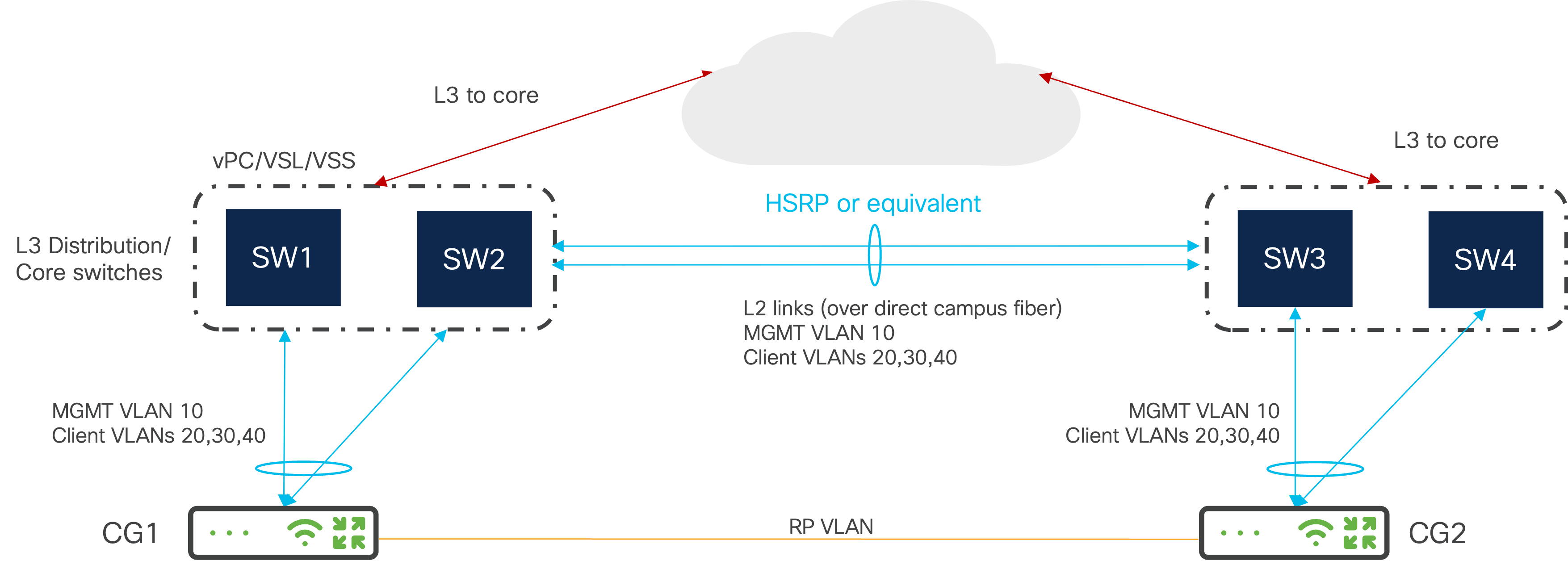

There are two supported upstream switch topologies. Both assume an upstream deployment in which multiple switches act as a single logical switch (for example, VSS, SVL, or vPC) or operate as an HSRP pair. If multiple Campus Gateways are deployed (a maximum of two in a cluster), the uplink switch will have a unique port-channel configured for each Campus Gateway.

Option 1 using Split Links

In Option 1, the connection for each Campus Gateway to the upstream switch stack will utilize split links to each switch in the stack. For each Campus Gateway, there will be an equal number of links to each switch in the stack with all the links being placed in a single port-channel.

This provides resiliency for each CG as there is link redundancy for each CG to the upstream switch, and in the case of failure in one of the switches, the CGs still have a connection to the rest of the network via the remaining switches. The drawback is that the total throughput per CG decreases after a switch failure, as there are fewer active links.

This would be the best option if keeping the number of CG chassis up in a cluster is more important than throughput.

Option 2 without Split Links

In Option 2, the connection for each Campus Gateway to the upstream switches will not utilize split links to each switch. In this case, the switches can be a logical stack, like StackWise Virtual, VSS, etc., or a pair of standalone switches configured in HSRP. Each Campus Gateway will connect all its links to a specific switch in the stack and form a single port-channel. Choose this option if the customer doesn't want to rely on a stack technology and the distribution/core switches are in standalone mode.

This provides resiliency for each CG as there is link redundancy for each CG to the upstream switch, and in the case of failure in one of the switches, the remaining CGs still have a connection to the rest of the network via the remaining switches with full throughput capabilities. The drawback is that if a switch fails, the CG connected to that switch is also considered down, as it has no way to communicate with the rest of the network. The APs will have to fail over to the other CG in the cluster, so wireless traffic is affected.

This would be the best option to have the highest throughput available as opposed to the number of CG chassis up.

Uplink connection default Behavior

For the initial boot and for Dashboard registration, it’s critical to connect the Campus Gateway to a switch via at least one port configured with an 802.1Q trunk connection that has at least one active VLAN. The VLAN needs to provide an IPv4 DHCP address to the CG with related DNS servers so that it can automatically reach Dashboard. This VLAN with active DHCP can be an 802.1Q tagged VLAN or the native VLAN on the trunk port. Once the CG is configured via the cluster onboarding flow, the user can change the CG’s IP address to a static IP in the same or a different VLAN. It is recommended to use an 802.1Q tagged VLAN to carry the management and client traffic.

Note: IPv6 for the Campus Gateway interfaces is not currently supported, so it’s important to provide an IPv4 address.

By default, when the Campus Gateway boots up, all ports (4x25G and 8x10G) will be configured to be in a single port-channel. This means that before wiring up the CG to the rest of the network, the ports on the upstream switch will need to be configured in a port-channel to allow the CG to come up. At least 2 ports are recommended for CG to upstream switch connections.

Note: It’s important to use only ports of the same speed and type to connect to the switch EtherChannel, so either all 10GE or all 25GE ports.

Port-Channel Configuration

The default port-channel configuration on the CG, which is applied to all the ports, is shown below for the port TwentyFiveGigE0/1/0. By default, all VLANs will be allowed on the ports with a native VLAN ID of 1.

```
port-channel load-balance src-dst-mixed-ip-port
!
interface TwentyFiveGigE0/1/0
 switchport mode trunk
 channel-group 1 mode active
interface TwentyFiveGigE0/1/1
 switchport mode trunk
 channel-group 1 mode active
interface Port-channel1
 switchport mode trunk
end
```

The default load balancing algorithm for Cisco Catalyst switches is either src-mac or src-dst-mixed-ip-port (the latter used on high end switches). The load balancing algorithm used for the etherchannel on Campus Gateway is src-dst-mixed-ip-port, so it is recommended to match it on the switch side as well. If non-Cisco switches are used, please consult the vendor documentation to make sure that this load balancing algorithm is used.
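To illustrate what a source/destination IP-and-port hash does, the sketch below pins each flow to one member link of a port-channel. It is a conceptual illustration only: the real hash is computed in switch hardware and is not documented here, so the use of CRC32, the function name, and the example addresses and interface names are all assumptions.

```python
# Conceptual illustration; NOT the hardware hash used by any Cisco platform.
import zlib


def pick_member_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                     links: list) -> str:
    """Map a flow's IP addresses and L4 ports to one port-channel member."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return links[zlib.crc32(key) % len(links)]


members = ["TwentyFiveGigE1/0/19", "TwentyFiveGigE1/0/20"]  # example names
for sport in (50001, 50002, 50003, 50004):
    # Hypothetical AP (10.0.0.11) sending VXLAN traffic to a CG (10.0.0.2).
    print(sport, pick_member_link("10.0.0.11", "10.0.0.2", sport, 16675, members))
```

Packets of the same flow always hash to the same link, which preserves ordering within a flow, while different flows can spread across the members.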

An example of a port-channel configuration for a customer-managed, Catalyst switch is below.

```
port-channel load-balance src-dst-mixed-ip-port
!
interface TwentyFiveGigE 1/0/19
 channel-group 1 mode active
 switchport mode trunk
 switchport trunk allowed vlan 2,3-10
interface TwentyFiveGigE 1/0/20
 channel-group 1 mode active
 switchport mode trunk
 switchport trunk allowed vlan 2,3-10
interface Port-channel 1
 switchport mode trunk
 switchport trunk allowed vlan 2,3-10
```

Similarly, if you connect to a Catalyst switch monitored by Dashboard or a Meraki MS switch, use Dashboard to configure a port-channel on the ports connected to CG.

Note: While the default Campus Gateway and cloud-managed switch configurations allow all VLANs, the best practice is to prune to include only required VLANs. In the example above, the VLANs are pruned to only include VLANs 2 and 3-10 as those are VLANs required for the CG cluster.
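When several uplink ports need the same settings, the switch-side configuration can be generated rather than typed by hand. The following sketch renders text using only the commands from the Catalyst example in this section; the function name and parameters are hypothetical, and the output should be reviewed against the switch's own documentation before use.

```python
# Hypothetical helper that renders the switch-side port-channel config text.
def render_port_channel(ports, allowed_vlans: str, group: int = 1) -> str:
    """Build an IOS-XE style port-channel config for the given member ports."""
    lines = ["port-channel load-balance src-dst-mixed-ip-port", "!"]
    for port in ports:
        lines += [
            f"interface {port}",
            f" channel-group {group} mode active",  # LACP active mode
            " switchport mode trunk",
            f" switchport trunk allowed vlan {allowed_vlans}",  # pruned list
            "!",
        ]
    lines += [
        f"interface Port-channel{group}",
        " switchport mode trunk",
        f" switchport trunk allowed vlan {allowed_vlans}",
    ]
    return "\n".join(lines)


print(render_port_channel(["TwentyFiveGigE1/0/19", "TwentyFiveGigE1/0/20"], "2,3-10"))
```

Passing a single port produces a one-member port-channel, which is the form required when only one CG link is connected.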

For more detailed info on how to configure port-channel on cloud-managed, Cisco switches, please see here: https://documentation.meraki.com/General_Administration/Tools_and_Troubleshooting/Link_Aggregation_and_Load_Balancing

What if you connect the Campus Gateway with only one link?

This is not recommended in production, but it can happen in the lab during testing. Even if only one port on Campus Gateway is connected, on the switch side you must create a port-channel with one port. If you don’t, the CG port will not be UP and will not pass traffic as it fails to negotiate LACP packets.

To configure a port-channel with just one physical port:

- In IOS-XE, create the port-channel interface as shown in the example above and add the channel-group command on the connected physical port.

- In Dashboard for an MS or cloud-managed Catalyst switch, you need to select at least two ports to be able to aggregate them in a port-channel; select the connected port and another port that is not connected and click on the Aggregate tab as shown below:

How does Campus Gateway reach out to Dashboard initially?

After initial boot up, Campus Gateway will leverage the Meraki auto-uplink logic to determine one active VLAN which it can use to connect to Dashboard. The CG will detect the available VLANs on the trunk port and choose one with internet connectivity. Campus Gateway leverages DHCP on the selected VLAN to get an IP address and automatically connect to Dashboard.

In order for Campus Gateway and the auto-uplink to work, the user needs to make sure:

- The uplink switch is configured accordingly (port-channel and trunk)

- DHCP is active on at least one VLAN, with a default gateway and DNS server provided

- Connectivity to dashboard is allowed on the active VLAN

Once Campus Gateway is connected to Dashboard and configured with the required connectivity information, Campus Gateway fetches the onboarding configuration and will use the IP address information pushed from Dashboard to connect to Dashboard from then on. For more information regarding the onboarding, please see the Adding Campus Gateway to a Network section.

Adding Campus Gateway to a Network

Note: Adding CGs to a network using the Meraki app is currently not supported.

High Availability with Campus Gateway Clusters

When adding Campus Gateway to a network, Campus Gateway will be added as part of a cluster. A cluster represents a group of CGs with connectivity to a common set of client VLANs.

At first release, a cluster will consist of a single Campus Gateway in a standalone deployment or two Campus Gateways in a high availability deployment.

A cluster of two Campus Gateways will be a high availability group which will provide both linear scalability and fault tolerance for Campus Gateway networks. The Campus Gateway clusters will operate in an Active-Active deployment with APs load balanced between the CGs in the cluster. The tunnel discovery and load distribution of the APs will be initiated by Dashboard.

This means that every CG will be used to terminate the data plane tunnels and switch the client VLANs to the upstream switch. This allows the throughput to increase to up to 200 Gbps since each CG is capable of up to 100 Gbps of throughput.

Note: Be aware that the scale of a fully redundant cluster remains at 5000 APs and 50000 clients. If one of the Campus Gateways in the cluster fails, all the APs and connected clients will then fail over to the remaining Campus Gateway.

Active-Active clustering will be automatically configured by Dashboard whenever there are two Campus Gateways in the cluster. The members of a cluster will also be configured as mobility peers, so clients will be able to roam across APs tunneling to different primary Campus Gateways in the cluster seamlessly.
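The reason the cluster scale stays at the single-CG limit can be seen in a small simulation. The actual AP distribution is decided by Dashboard and is not described in this guide, so the round-robin assignment, the function names, and the AP/CG labels below are purely illustrative assumptions.

```python
# Illustrative only: Dashboard decides the real AP distribution.
def assign_primaries(ap_names, cgs):
    """Spread APs across the cluster members (round-robin, as an assumption)."""
    return {ap: cgs[i % len(cgs)] for i, ap in enumerate(ap_names)}


def fail_over(assignment, failed_cg, cgs):
    """Move every AP on the failed CG to a surviving cluster member."""
    survivors = [cg for cg in cgs if cg != failed_cg]
    if not survivors:
        raise RuntimeError("no Campus Gateway left in the cluster")
    return {ap: (survivors[0] if cg == failed_cg else cg)
            for ap, cg in assignment.items()}


cluster = ["cg-a", "cg-b"]
aps = [f"ap-{n}" for n in range(1, 5)]
active_active = assign_primaries(aps, cluster)
print(active_active)
print(fail_over(active_active, "cg-a", cluster))
```

After a failure, the surviving CG carries every AP, which is why the total AP and client counts must fit within what one Campus Gateway supports.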

Onboarding Flow

Adding a Campus Gateway to a network running code earlier than the MR31.2.X release train will result in the APs automatically upgrading and reloading to MR31.2.X. It is recommended to add the Campus Gateway to the network during a planned maintenance window.

Adding Campus Gateway to a network will trigger a new onboarding flow. This flow will have the user configure the necessary cluster settings. Users can add a single Campus Gateway at a time or both Campus Gateway units at the same time. The onboarding flow will be the same regardless.

After connecting the Campus Gateway(s) to the upstream switch, power on the CG(s) and wait for the box to power on and connect to Dashboard.

Once the onboarding flow is completed, it will take about 5 minutes for the new configurations to be applied to the CG, provided the CG is already connected to Dashboard, as shown by the LED indicators on the front of the box.

If the CG fails to connect to Dashboard, check the Local Status Page for connectivity or initial port configuration issues. The steps for this can be found in the Local Status Page section.

Step 1: In either the Network-wide > Configure > Add Devices or Organization > Configure > Inventory pages, select either a single Campus Gateway or both Campus Gateways and select Add to network. This can be either a new network or an existing network.

Note: Adding the CG to a network will not be supported in the legacy inventory page nor legacy licensing page. This will be represented on the page if View new version is shown. If using Per-Device Licensing, the CG will not be able to be added to the network regardless of legacy or new version of the pages.

Step 2: Adding a Campus Gateway to a Meraki Network will trigger the onboarding flow to add the CG to a cluster. First, the CG will need to either be added to an existing network or a new network.

a. Existing Network: Select the existing network name.

Note: All networks will be populated in the existing networks list. The existing network chosen will automatically be configured to include the Wireless and Campus Gateway network type.

b. New Network: Input the new network name and select the Network configuration to use if needed.

Step 3: Once the network is assigned, the Management and AP Tunnel Interfaces need to be configured. These will be the only two L3 interfaces that can be configured on Campus Gateway.

- Management Interface: This is the interface to the external network that is used to talk to Meraki Dashboard and build the Meraki Tunnel. This interface can be assigned an IP via DHCP or statically.

- AP Tunnel Interface: This is the interface to the internal network that is used to terminate the VXLAN tunnels from the APs as well as to source traffic to network services, like RADIUS, syslog, etc. This interface must be assigned an IP statically. This is needed as the AP tunnel interface is the tunnel termination point for the APs and cannot change dynamically without creating a network disruption.

These can be deployed as a single L3 interface (share the same IP address) or as dual L3 interfaces:

- Single L3 Interface Deployment: Both the Management and AP Tunnel are the same L3 interface, meaning that they share the same VLAN and IP address. This requires the IP address assignment to be static. This is easier to deploy as only one VLAN and subnet needs to be configured on the box.

- Dual L3 Interface Deployment: The Management and AP Tunnel Interfaces are separate interfaces on different VLANs and subnets. The Management Interface’s IP address can either be assigned statically or via DHCP. The AP Tunnel Interface must have an IP address assigned statically. This model has the benefit of keeping internet and Dashboard traffic separated from internal traffic.

When using Dual L3 Interface Deployment, access points that tunnel to campus gateway cannot be in the same VLAN/subnet as the campus gateway's management interface.
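The interface rules above can be checked before deployment. The following is a minimal sketch, assuming a hypothetical `L3Interface` record and `validate_interfaces` helper (neither is part of Dashboard or any Meraki tooling); it only encodes the constraints stated in this section.

```python
from dataclasses import dataclass
from ipaddress import IPv4Interface, IPv4Network
from typing import Optional


@dataclass
class L3Interface:
    """Hypothetical description of one CG L3 interface."""
    vlan: int
    mode: str                                # "static" or "dhcp"
    address: Optional[IPv4Interface] = None  # set when mode == "static"


def validate_interfaces(mgmt, tunnel=None, ap_subnets=()):
    """Return a list of rule violations (empty list means no violation found).

    tunnel=None models the single L3 interface deployment, where the
    Management and AP Tunnel Interfaces share one VLAN and IP address.
    """
    problems = []
    ap_tunnel = tunnel if tunnel is not None else mgmt
    # The AP Tunnel Interface must always be statically addressed.
    if ap_tunnel.mode != "static" or ap_tunnel.address is None:
        problems.append("AP Tunnel Interface must use a static IP address")
    if tunnel is not None:
        # Dual L3 deployment: separate VLANs and subnets.
        if tunnel.vlan == mgmt.vlan:
            problems.append("dual L3 deployment needs separate VLANs")
        if mgmt.address is not None and tunnel.address is not None:
            if mgmt.address.network.overlaps(tunnel.address.network):
                problems.append("dual L3 deployment needs separate subnets")
        # Tunneling APs must not sit in the management VLAN/subnet.
        if mgmt.address is not None:
            for net in ap_subnets:
                if mgmt.address.network.overlaps(net):
                    problems.append(f"AP subnet {net} overlaps the management subnet")
    return problems
```

For example, a single-interface plan that relies on DHCP is flagged, because the shared interface is also the tunnel termination point. When the Management Interface uses DHCP in a dual deployment, its subnet is unknown to this sketch, so the AP subnet check is skipped.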

Regardless of the deployment type chosen, the Management Interface will need to be configured, using either DHCP or static assignment (static assignment is required when using a single L3 interface). Below is an example of a Static IP configuration.

If the AP Tunnel Interface will share the same L3 interface as the Management Interface, leave Manually configure AP tunnel interface for this cluster untoggled.

If the AP Tunnel Interface will be a different L3 interface, toggle Manually configure AP tunnel interface for this cluster. In this case, specify a different VLAN/subnet for this interface. An example configuration is shown below.

Step 4: Configure the settings for the Campus Gateway cluster.

a. Cluster name: This will be used when referring to and selecting the CG cluster to tunnel SSIDs to.

b. Uplink port native VLAN: This should match what is configured on the uplink switch. Any untagged traffic received will be treated as belonging to this VLAN. Any traffic sent on this VLAN will be sent untagged, without an 802.1Q tag.

This field accepts only one VLAN number. If left blank, the default value will be VLAN 1. The VLAN used can be in the range 1-4094, excluding 1002-1005.

c. Uplink port client VLAN: This defines the list of VLANs to which clients can be assigned and that will be allowed on the trunk interface to the upstream switch. If a client VLAN is not defined here, the client traffic will be dropped.

This field accepts multiple VLAN numbers and ranges. The VLANs used can be in the range 1-4094, excluding 1002-1005.
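The VLAN constraints for both fields can be sketched as a small validator. This is an illustration only: the comma-and-hyphen input syntax (for example `4, 20-22`) and the helper names are assumptions, since the guide states only that the client VLAN field accepts multiple numbers and ranges.

```python
RESERVED_VLANS = range(1002, 1006)  # 1002-1005 are excluded by the guide


def is_valid_vlan(vlan):
    """True if the VLAN is in 1-4094 and not in the excluded 1002-1005 block."""
    return 1 <= vlan <= 4094 and vlan not in RESERVED_VLANS


def parse_native_vlan(text):
    """A single VLAN number; a blank field falls back to VLAN 1."""
    text = text.strip()
    vlan = int(text) if text else 1
    if not is_valid_vlan(vlan):
        raise ValueError(f"VLAN {vlan} is not allowed")
    return vlan


def parse_client_vlans(text):
    """Expand a list such as '4, 20-22' into a sorted list of VLAN IDs."""
    vlans = set()
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue
        first, _, last = part.partition("-")
        low = int(first)
        high = int(last) if last else low
        if low > high:
            raise ValueError(f"range {part} is reversed")
        for vlan in range(low, high + 1):
            if not is_valid_vlan(vlan):
                raise ValueError(f"VLAN {vlan} is not allowed")
            vlans.add(vlan)
    return sorted(vlans)
```

A range that crosses the excluded block, such as `1000-1003`, raises an error rather than silently skipping VLANs 1002 and 1003.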

Step 5: Review the configurations and click Finish to deploy the Campus Gateway to the network.

Note: At first release, cluster deletion will not be supported. Once a cluster is created for the network, this cluster will need to be used for future configurations in the network. In order to delete a cluster, the network will have to be deleted as well.

Step 6: The Campus Gateway Clusters page displays a list of all CGs added to the cluster, along with the cluster configurations. Once SSIDs are configured to tunnel to the cluster, they will appear in the SSID section.

Step 7: To change the cluster settings, click on the Edit button.

A slide-out menu will appear, where the following settings can be edited:

a. Management VLAN and Interface for each CG

b. AP Tunnel Interface for each CG

c. Uplink port native VLAN

d. Uplink port client VLANs

Removing a Campus Gateway from a Meraki Network

Navigate to Wireless > Campus Gateway (MCG) > Campus Gateways, select the campus gateway(s) you want to remove from the network and click Remove.

If you get an alert stating Deleting all campus gateways from the cluster that is associated with SSID(s) is not allowed, perform these steps before removing the campus gateway device.

Navigate to Wireless > Configure > SSIDs, and check which SSIDs are configured to tunnel the traffic to a campus gateway cluster, including disabled SSIDs. In this example, *mcg-psk is tunneling the traffic to the campus gateway cluster SFO-Cluster-1_change.

From the same page, click edit settings, then navigate to Client IP and VLAN and select a different method, such as NAT mode or Bridged, then Save.

Once there are no SSIDs configured as Tunnel mode with Campus Gateway, you can remove the campus gateway from the Meraki network.

Onboarding via the Meraki API

To onboard Campus Gateway to a Meraki Network via the API, the Campus Gateway will need to be:

- Claimed to the network using the Claim Network Devices API operation

- Added to a Campus Gateway cluster using either the:

a. Create Network Campus Gateway Cluster API operation to add the Campus Gateway to a new cluster

b. Update Network Campus Gateway Cluster API operation to add the Campus Gateway to an existing cluster

If the Campus Gateway is only added to the network via the Claim Network Devices API operation, the Campus Gateway will not show up in the network nor the Campus Gateway Cluster page. It needs to be added to a Campus Gateway Cluster.
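The two-step flow can be sketched as a request plan. In this sketch the claim path and its `serials` body follow the Claim Network Devices operation; the cluster path is an assumption inferred from the operation names and should be checked against the Meraki API reference, and the cluster payload is left entirely to the caller because its schema is not described in this guide. The function only builds the requests and does not send them.

```python
BASE_URL = "https://api.meraki.com/api/v1"


def onboarding_requests(network_id, serials, cluster_payload, cluster_id=None):
    """Build the two API calls needed to onboard Campus Gateways.

    cluster_id=None  -> add the CGs to a new cluster (create operation)
    cluster_id given -> add the CGs to an existing cluster (update operation)
    """
    # Step 1: claim the devices into the network.
    claim = {
        "method": "POST",
        "url": f"{BASE_URL}/networks/{network_id}/devices/claim",
        "json": {"serials": list(serials)},
    }
    # Step 2: place the devices in a cluster.
    # ASSUMPTION: this path is inferred, not taken from the guide.
    clusters_url = f"{BASE_URL}/networks/{network_id}/campusGateway/clusters"
    if cluster_id is None:
        cluster = {"method": "POST", "url": clusters_url, "json": cluster_payload}
    else:
        cluster = {
            "method": "PUT",
            "url": f"{clusters_url}/{cluster_id}",
            "json": cluster_payload,
        }
    return [claim, cluster]
```

Each entry can then be passed to an HTTP client of choice together with the API key header. Issuing only the first request reproduces the situation described above, where the Campus Gateway is claimed but does not appear on the Campus Gateway Cluster page.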

Access Point to Campus Gateway Assignment

When APs are configured to tunnel to the Campus Gateway clusters, the APs will get a list of Campus Gateways from Dashboard. At first release, APs that belong to the same subnet are assigned to the same CG. Depending on the number of subnets and APs in those subnets, the load may not be split evenly between the two CGs in the cluster. The APs will maintain tunnels to the primary and backup CG. For APs in different subnets, the primary and backup CG will be different. For example, CG1 will be primary and CG2 will be backup for AP1 and vice versa for AP2.
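The effect of per-subnet assignment on load balance can be illustrated with a short model. This is a sketch under a stated assumption: subnets are alternated between the two CGs in sorted order. The guide does not describe how Dashboard actually chooses the primary for each subnet, so only the "same subnet, same CG" behavior is taken from the text.

```python
from collections import Counter
from ipaddress import ip_network


def assign_by_subnet(ap_subnets, gateways=("CG1", "CG2")):
    """Map each AP to a (primary, backup) pair, one decision per subnet.

    ap_subnets: dict of AP name -> subnet string, e.g. {"AP1": "10.1.0.0/24"}.
    ASSUMPTION: subnets alternate between the two CGs in sorted order.
    """
    subnets = sorted({ip_network(s) for s in ap_subnets.values()})
    primary_index = {net: i % 2 for i, net in enumerate(subnets)}
    assignment = {}
    for ap, subnet in ap_subnets.items():
        idx = primary_index[ip_network(subnet)]
        assignment[ap] = (gateways[idx], gateways[1 - idx])
    return assignment


def primary_load(assignment):
    """Count how many APs use each CG as their primary."""
    return Counter(primary for primary, _ in assignment.values())
```

With three APs in one subnet and a single AP in another, the split is three to one, which shows why the balance depends on how APs are distributed across subnets.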

In the event that the second CG in the cluster is added after the initial deployment of the first CG and APs (traffic is already being tunneled), the CGs will form the cluster but will not load balance the APs. The APs will continue to tunnel traffic to the first CG until the maintenance window. This is the same window used for firmware upgrades, defined in Network-wide > General.

How do I monitor which Campus Gateway an AP is tunneling to?

In the Campus Gateway Clusters page, there is a tab called Tunneled APs. If the Tunnel status is up, meaning both the QUIC and VXLAN tunnels are up between the AP and its current Campus Gateway, the Campus gateway field will be populated with the Campus Gateway the AP is currently tunneling to.

Redundancy Port

The Redundancy Port (RP) is a dedicated port on the CG chassis responsible for syncing client states between chassis. The CG has two options for the RP port on the chassis:

- 1x 1G RJ45 copper port

- 1x 1G/10G SFP/SFP+ fiber port.

Either port can be used for HA. Please see the Supported SFP Modules section for the modules supported.

Note: Do not use both RP ports (RJ-45 and SFP) together. This is not a supported configuration and may lead to unexpected issues.

If the Redundancy Port (RP) link is connected between the Primary and Backup Campus Gateways in the cluster, they will sync client states over the RP link. The link is also used for fast keepalives (every 100 ms). Keeping the CGs in sync allows the data path to be kept hot, with backup tunnels kept alive as well. This allows for failover times of 1-2 seconds if one of the CGs in the cluster fails, but only if the RP link between the CGs is connected. Without the RP link, the failover time may increase.

The RP link can be connected in one of two methods:

- Back-to-Back: In this method, the RP ports on both CGs are directly connected back-to-back with no intermediate switches in between. This is the recommended method as it is also the simplest. By directly connecting the CGs, the only causes of a failover are one of the CG chassis failing or the RP link between the CGs failing. However, this has the downside of limiting how far apart the CGs can be, as the maximum distance is determined by the SFP/SFP+ modules.

- Dedicated L2 VLAN with Intermediate Switches: In this method, the RP port on each CG is connected to a dedicated port on its respective upstream switch. The connections must also share their own dedicated L2 VLAN for connectivity.

If the CGs are in separate data centers, the VLAN must be spanned across the data centers. This method has the advantage of no longer being restricted to the max distance supported by the SFP/SFP+ modules. However, this will add more complexity into the deployment. Not only will the deployment have to consider how to configure and span the necessary VLANs, but also, in the event of a failover, the intermediate switches and their respective connections will now also have to be considered along with the status of the CGs.

Regardless of how the RP link is deployed, the link between the two Campus Gateways must meet the following requirements:

- RP Link Latency < 80 ms

- Bandwidth > 60 Mbps

- MTU ≥ 1500B
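These three thresholds lend themselves to a simple pre-deployment check. The sketch below assumes the measurements are already available from whatever tooling is in use; the function name is illustrative.

```python
def rp_link_problems(latency_ms, bandwidth_mbps, mtu_bytes):
    """Compare measured RP link values against the stated requirements."""
    problems = []
    if not latency_ms < 80:
        problems.append(f"latency {latency_ms} ms is not below 80 ms")
    if not bandwidth_mbps > 60:
        problems.append(f"bandwidth {bandwidth_mbps} Mbps is not above 60 Mbps")
    if not mtu_bytes >= 1500:
        problems.append(f"MTU {mtu_bytes} B is below 1500 B")
    return problems
```

An empty list means the link meets all three requirements. Note that the latency and bandwidth limits are strict, so a link measured at exactly 80 ms or exactly 60 Mbps is reported as a problem.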

Campus Gateway Cluster Failure Detection Behavior

Based on the failure type, the amount of downtime will vary.

Access Point Tunnels Down due to network failure

Between the access points and Campus Gateways, there will be regular keepalives sent for both tunnels, QUIC and VXLAN, to check the status of the tunnel connectivity between them.

- QUIC Tunnel

  - The keepalives will be sent only if the AP has not received any traffic from the CG for 10 seconds. While the connection is idle, these keepalives are then sent at 10-second intervals until the CG sends traffic to the AP.

  - In the event of a keepalive failure, there will be retries after the probe timeout with exponential back-off until the max idle time is reached (16 seconds).

- VXLAN Tunnel

  - The keepalives (BFD echo) will be sent every 5 seconds.

  - In the event that an echo is missed, there will be four echo retries sent in 1-second intervals.

  - With all five echoes missed, the failure is detected after 9 seconds of downtime.

In the case that there is a keepalive failure for one of the tunnels, both tunnels will be brought down. Because of this, it takes between 9 and 16 seconds to detect an AP tunnel failure and start a failover.
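The 9 to 16 second window follows directly from the timers listed above, as this short calculation shows. The constant names are illustrative; the values are the ones given in this section.

```python
VXLAN_ECHO_INTERVAL_S = 5    # BFD echo period
VXLAN_RETRY_COUNT = 4        # retries after the first missed echo
VXLAN_RETRY_INTERVAL_S = 1   # spacing between retries
QUIC_MAX_IDLE_S = 16         # QUIC gives up when max idle time is reached


def vxlan_detection_s():
    """One echo interval plus four 1-second retries."""
    return VXLAN_ECHO_INTERVAL_S + VXLAN_RETRY_COUNT * VXLAN_RETRY_INTERVAL_S


def tunnel_failure_window_s():
    """(fastest, slowest) detection time, since either tunnel failing
    brings both tunnels down."""
    times = (vxlan_detection_s(), QUIC_MAX_IDLE_S)
    return min(times), max(times)
```

Here `vxlan_detection_s()` evaluates to 5 + 4 × 1 = 9 seconds, and the window is bounded above by the 16-second QUIC max idle time.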

Campus Gateway Chassis Failure

In the event of a Campus Gateway chassis failure, which can be caused by a crash, power down, etc., a stateful and quick failover (<1-2 seconds) can only be accomplished if the RP is connected between the two Campus Gateways. This allows the client states to be synced between the chassis, so the data path can be kept hot on the APs' backup Campus Gateway.

The keepalives will be sent every 100 ms, and a failure is declared if five RP keepalives and one ping via the AP Tunnel Interface to the other Campus Gateway's AP Tunnel Interface are missed. Once that happens, the backup Campus Gateway will send a message to the APs to change the state of their VXLAN tunnel to the backup Campus Gateway from Blocked to Allowed. It takes the APs less than 1 to 2 seconds to change the state of their VXLAN tunnel to the backup Campus Gateway and continue passing client traffic.
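The failure condition combines two independent signals, which can be written out as follows. This is a sketch of the rule as described, not of the CG implementation; the ping timeout is not stated in the guide, so only the keepalive portion of the detection time is computed.

```python
RP_KEEPALIVE_INTERVAL_MS = 100
MISSED_KEEPALIVES_FOR_FAILURE = 5


def peer_declared_failed(missed_rp_keepalives, tunnel_ping_answered):
    """Both conditions must hold: five RP keepalives missed AND the ping
    over the AP Tunnel Interface unanswered."""
    return (missed_rp_keepalives >= MISSED_KEEPALIVES_FOR_FAILURE
            and not tunnel_ping_answered)


def keepalive_loss_ms():
    """Time spent missing five keepalives (a lower bound on detection)."""
    return RP_KEEPALIVE_INTERVAL_MS * MISSED_KEEPALIVES_FOR_FAILURE
```

Missing five keepalives takes 5 × 100 ms = 500 ms, which is consistent with the stated failover time of less than 1 to 2 seconds. If the ping is still answered, the peer chassis is reachable and only the RP link is suspect, so no chassis failure is declared.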

Campus Gateway Network Failure

If Campus Gateway loses connectivity to its gateway, the gateway failure detection will take 8 seconds. This is because four consecutive ARP requests and four consecutive ICMP echoes need to be missed.

When there is a network failure, there will be longer detection times. Hence, there will be slower failover times, leading to longer downtimes.

Campus Gateway Cluster High Availability Behavior

The below table captures the behavior for various scenarios in a cluster deployment.

| # | RP Link State | AP Tunnel Interface Link | Gateway state at Primary CG | Gateway state at Preferred Backup CG | Action |
|---|---|---|---|---|---|
| 1 | UP | UP | UP | UP | AP joins the primary and preferred backup CG. Clients are anchored on the primary. Client records are replicated to the preferred backup. Client state is plumbed to the data plane in the preferred backup and data plane is hot. |
| 2 | UP | UP | UP | DOWN | AP joins the primary CG. Clients are anchored on the primary. Client records are replicated to the preferred backup. The client states are not plumbed to the data plane in the preferred backup as the AP has not joined it. |
| 3 | UP | UP | DOWN | UP | When the gateway reachability is lost at the primary CG, the preferred backup CG notifies the event to the AP. The AP unblocks the VXLAN tunnel with the preferred backup CG and GARPs are sent out for the plumbed clients. The AP re-anchors the clients to the preferred backup, and the clients become active. Gateway reachability failure detection should happen faster than the AP detecting loss of connectivity to the primary CG. |
| 4 | UP | UP | DOWN | DOWN | AP cannot join the primary nor the preferred backup. There will be no client records in either CG. |
| 5 | UP | DOWN | UP | UP | AP joins the primary and preferred backup CG. Clients are anchored on the primary. Client records are replicated to the preferred backup. Client state is plumbed to the data plane in the preferred backup and data plane is hot. Note: Since AP Tunnel Interface link is down, Dual Master Detection (DMD) is not possible at this time if the RP link goes down. |
| 6 | UP | DOWN | UP | DOWN | AP joins the primary CG. Clients are anchored on the primary. Client records are replicated to the preferred backup. Client state is not plumbed to the data plane in the preferred backup as the AP has not joined it. Note: Since AP Tunnel Interface link is down, Dual Primary Detection is not possible at this time if the RP link goes down. |
| 7 | UP | DOWN | DOWN | UP | When the gateway reachability is lost at the primary CG, preferred backup CG notifies the event to the AP. AP unblocks the VXLAN tunnel with the preferred backup CG and GARPs are sent out for plumbed clients. The AP re-anchors the clients to the preferred backup and clients become active. Gateway reachability failure detection should happen faster than the AP detecting loss of connectivity to the primary CG. Note: Since AP Tunnel Interface link is down, Dual Primary Detection is not possible at this time if the RP link goes down. |
| 8 | UP | DOWN | DOWN | DOWN | AP cannot join the primary and the preferred backup. There will be no client records in either CG. |
| 9 | DOWN | UP | UP | UP | AP joins the primary and preferred backup CG. Clients are anchored on the primary. Client records are not replicated to the preferred backup since the RP link is down. |
| 10 | DOWN | UP | UP | DOWN | AP joins the primary CG. Clients are anchored on the primary. Client records are not replicated to the preferred backup since the RP link is down. |
| 11 | DOWN | UP | DOWN | UP | When the gateway reachability is lost at the primary CG, the preferred backup CG notifies the event to the AP. The AP unblocks the VXLAN tunnel with the preferred backup CG. The AP re-anchors the clients to the preferred backup and client state is created and plumbed to the data plane (this is similar to the client joining afresh). |
| 12 | DOWN | UP | DOWN | DOWN | AP cannot join the primary nor the preferred backup. There will be no client records in either CG. |
| 13 | DOWN | DOWN | UP | UP | Double fault. Since the CGs cannot communicate with each other, each node assumes the other is down. But the AP will be able to connect to the primary as well as the preferred backup. The AP may anchor the clients on the primary, but there will be no replication of records to the preferred backup. Note: When both the RP and AP Tunnel Interface links go down, the CG will send a Peer Node Down event to the AP. Because of this, the AP may see a conflict, i.e. Peer Node Down event, yet its QUIC tunnel with the primary is UP. |
| 14 | DOWN | DOWN | UP | DOWN | Double fault. Since the CGs cannot communicate with each other, each node assumes the other is down. But the AP will be able to connect to the primary. The AP will anchor the clients on the primary, but there will be no replication of records to the preferred backup. |
| 15 | DOWN | DOWN | DOWN | UP | Double fault. Since the CGs cannot communicate with each other, each node assumes the other is down. But the AP will be able to connect to the preferred backup. The AP will anchor the clients on the preferred backup. Note: When both the RP and AP Tunnel Interface links go down, the CG will send a Peer Node Down event to the AP. |
| 16 | DOWN | DOWN | DOWN | DOWN | AP cannot join the primary and the preferred backup. There will be no client records in either CG. |
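The sixteen scenarios follow a few recurring rules, which can be condensed into a small function. This is one reading of the table rather than the CG's actual logic: the field names are illustrative, `replicating` means ongoing replication while clients are anchored on the primary, and a failover is labeled `fresh` when client state has to be recreated on the backup because the RP link is down.

```python
def ha_behavior(rp_up, tunnel_link_up, gw_primary_up, gw_backup_up):
    """Summarize cluster behavior for one row of the HA table."""
    # The AP can join a CG only if that CG can reach its gateway.
    joins = [name for name, up in (("primary", gw_primary_up),
                                   ("backup", gw_backup_up)) if up]
    if gw_primary_up:
        anchor = "primary"
    elif gw_backup_up:
        anchor = "backup"
    else:
        anchor = None
    # Client records are replicated only over a working RP link.
    replicating = rp_up and anchor == "primary"
    failover = None
    if anchor == "backup":
        failover = "stateful" if rp_up else "fresh"
    return {
        "ap_joins": joins,
        "clients_anchored_on": anchor,
        "replicating": replicating,
        # Data plane is hot only if the AP has also joined the backup.
        "backup_data_plane_hot": replicating and gw_backup_up,
        "failover": failover,
        # With the RP link up but the tunnel link down, losing the RP
        # link later could not be distinguished from a peer failure.
        "dual_primary_detection_possible": tunnel_link_up,
        "double_fault": not rp_up and not tunnel_link_up,
    }
```

For example, `ha_behavior(True, True, True, True)` corresponds to row 1 (hot data plane on the backup), while `ha_behavior(False, True, False, True)` corresponds to row 11, where clients re-anchor to the backup as if joining afresh.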

Recommended Cluster Deployment Topology

Two Campus Gateway Cluster in one DC

In this topology, it is recommended to cross-connect the uplinks to a single logical distribution switch (vPC, VSL, etc.). This prioritizes redundancy while still maintaining adequate throughput in the event of a switch failure. If max throughput is required, each Campus Gateway can connect all of its uplinks to a unique switch in the stack. For example, CG1 connects to SW1 and CG2 connects to SW2. Please see the Recommended Switch Topology section for more information.

The RP ports can be directly connected or connected through the upstream switch with L2 VLANs.

On the upstream switch side, two unique EtherChannels will need to be configured: one for each Campus Gateway. They will need to be configured to use LACP and have trunking enabled with the corresponding list of allowed VLANs. Please see the Port-Channel Configuration section for example configurations.

The upstream switch should have the appropriate scale for the number of clients, considering the ARP and MAC address table size for the switch platform. Please see the Recommended Upstream Switch section.

Two Campus Gateway Cluster across DCs

This deployment topology is recommended when increased redundancy is needed. For example, the cluster is split among two different buildings, areas of the Data Center on different power distributions, or different Data Centers.

Inter-DC connections are required to be Layer 2. This allows Management VLANs, client VLANs, and the RP VLAN to be shared.

If distance allows it, the RP link of the two Campus Gateways can be directly connected.

Local Status Page

If connectivity between Campus Gateway and Dashboard is lost and/or cannot be established, the Local Status Page (LSP) can be used to manually configure parameters like the uplink ports’ VLAN, IP address or DNS settings to restore the connectivity.

This can happen for multiple reasons:

- DHCP is not available on the active VLAN

- The information provided by DHCP is not correct (missing DNS, wrong default gateway, etc.)

- Connectivity to Meraki Dashboard is not available, etc.

There is no console access on Campus Gateway, so the LSP is used to connect to the box. This is mainly used for out-of-band uplink configuration and overall system health check.

If Campus Gateway is unable to reach out to Dashboard, even if LSP is used, please reach out to Meraki Support.

Connecting to the Local Status Page

Directly connecting to Campus Gateway using the Service Port

Step 1: Plug in the computer to the Service Port (SP port). This will assign an IP address in the range 198.18.0.X.

Note: Please refer to the Front View section for where to find the SP port.

Step 2: Navigate to https://mcg.meraki.com or directly to https://198.18.0.1 and enter the credentials.

The credentials for Campus Gateway devices that have never connected to a Meraki network or that went through a factory reset are:

- Username: admin

- Password: Cloud ID (Meraki Serial Number) of the CG

Note: The cloud ID can be found in the shipment email from Cisco as well as physically on the chassis of the CG.

After CG devices connect to a Meraki network for the first time, their LSP credentials are automatically updated to a strong password. Please see the Changing Log-In Credentials section in the Using the Cisco Meraki Device Local Status Page guide for more details.

Connecting to Campus Gateway using the Management or AP Tunnel Interface

Step 1: Navigate to the IP address of the Management or AP Tunnel Interface of Campus Gateway and enter the credentials. For example, if the AP Tunnel Interface is using the IP address 10.70.2.2, the LSP will be accessed using https://10.70.2.2.

The credentials for Campus Gateway devices that have never connected to a Meraki network or that went through a factory reset are:

- Username: admin

- Password: Cloud ID (Meraki Serial Number) of the CG

Note: The cloud ID can be found in the shipment email from Cisco as well as physically on the chassis of the CG.

After CG devices connect to a Meraki network for the first time, their LSP credentials are automatically updated to a strong password. Please see the Changing Log-In Credentials section in the Using the Cisco Meraki Device Local Status Page guide for more details.

Health Summary

On the home page of the LSP, the user gets an overview of the status of the key Campus Gateway components.

- Dashboard Connectivity: Quickly view the connection status to Dashboard

- CG Details: Get key information about the CG, such as Meraki Network, CG chassis name, and MAC Address.

- Client Connection: IP address of the client used to access the LSP

- Device Summary: The total number of APs tunneling to the CG and the number of clients connected

- System Resources: View the CPU and Memory utilization of the CG as well as the temperature of the chassis. The status of each power supply will also be shown.

Configuration

Uplink Configuration

The user can configure the following uplink settings:

- VLAN

- IP Address

- Gateway

- DNS server(s)

The Campus Gateway will use these to connect to Dashboard if DHCP does not work or is not configured. As all the ports are configured in a single L2 port-channel, this will configure the L3 VLAN interface (SVI) for management connectivity.

Note: If Campus Gateway is already provisioned and is using the single interface deployment, where both the Management and AP Tunnel Interface share the same IP address, the Uplink Configuration will not be able to be changed as this would also change the AP Tunnel Interface. This would lead to downtime in the wireless network as the APs would no longer be able to tunnel to the Campus Gateway's AP Tunnel Interface.

Adaptive Policy

In the event the Adaptive Policy is configured in the network and Campus Gateway is unable to connect to the upstream network and Dashboard due to Campus Gateway not having infrastructure Adaptive Policy enabled (uplink port is not configured with the correct SGT), the LSP can be used to enable uplink traffic to be tagged with the correct SGT, typically a value of 2.

Note: The SGT value can be found in either the upstream switch port configurations or, for Cisco cloud-managed devices, in Organization > Adaptive policy > Groups page under Infrastructure.

Switchport Details

The Admin and Operational status of all the ports as well as their VLAN configurations are shown. This can be used to verify the pushed configuration from Meraki Dashboard if any issues appear.

Access Points Page

The list of all the APs tunneling to the Campus Gateway will be shown as well as their respective MAC and IP addresses.

Clients Page

The list of all the clients connected to the centralized SSIDs is shown, along with the SSIDs and VLANs the clients are connected to. Any client state other than Run indicates an issue with client connectivity.

Support Bundle Creation

In the event of any issues on the Campus Gateway, Meraki Support may ask for a support bundle to be generated on the CG for debugging purposes.

Step 1: Click Generate to begin the Support Bundle creation process. This will take a few minutes to complete.

Step 2: Once completed, click Download to get the generated tar.gz file and share this with Meraki Support.

Reset and Reload Campus Gateway

Campus Gateway can quickly be reloaded or completely factory reset using the LSP. Click on the respective button to do so.

SSID Creation

Tunneling to a Campus Gateway Cluster is a per-SSID configuration. For a campus greenfield Meraki deployment, it is expected to have all SSIDs centrally tunneled. Each AP will form a VXLAN tunnel to the CG, which all SSIDs that tunnel to a cluster will share. This creates an L2 overlay that will allow the clients to roam seamlessly across all APs in the campus. APs can also broadcast centrally tunneled and locally bridged SSIDs concurrently.

If connectivity between the Campus Gateway(s) and the APs is lost, the centrally tunneled SSIDs will stop broadcasting. Locally bridged SSIDs will remain unaffected.

In the example above, when clients associate to the tunneled SSID, they will be mapped to a VLAN, in this case VLAN 4, that is defined centrally at the CG. The client VLAN is carried to the distribution or core switch, which acts as the default gateway. Because the client VLAN only exists between the CG and the uplink switch, it can be made as large as needed (e.g. /21) since the broadcast domain is confined.

Configuring tunneling for the SSIDs will apply to all supported SSID configurations:

- Passphrase (WPA2 PSK, WPA3 SAE and iPSK without RADIUS)

- RADIUS based (802.1X, MAB, iPSK with RADIUS)

- Open / OWE

- WPN

- Splash Page

Tunneling is configured as follows:

Step 1: Go to Wireless > Configure > Access Policy and select the SSID to be tunneled.

Step 2: In the Client IP and VLAN section, select External DHCP server assigned and the Tunneled option. There will be a new option called Tunnel mode with Campus Gateway (MCG). Select this and choose the CG cluster created.

If required, this is also where the mDNS gateway is enabled. For more information, see the Configuring mDNS Gateway section.

Step 3: Set the client VLAN in the VLAN tagging section. This can be either the VLAN ID or Named VLAN. The VLAN configured must be defined in the list of Uplink port client VLANs configured on the CG cluster.

For more information on configuring Named VLANs and VLAN profiles, please refer to the guide here: https://documentation.meraki.com/General_Administration/Cross-Platform_Content/VLAN_Profiles

Note: If the user will be leveraging VLAN override, then the VLAN information here can be left untagged.

In the cases below, client traffic will be sent out of the CG untagged, and the uplink switch will place it in the native VLAN configured on its port.

- VLAN tagging is disabled (no VLAN assigned)

- VLAN ID is set to 0

- VLAN is set to the Native VLAN of CG

Note: If a user configures a VLAN that is not in the list of Uplink port client VLANs at CG, the traffic will be dropped.
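The tagging rules above can be summarized in a short decision function. This is a sketch of the described behavior with illustrative names and return values; the native VLAN is checked first, which is an assumption about evaluation order that the guide does not spell out.

```python
def egress_action(ssid_vlan, native_vlan, allowed_client_vlans):
    """Describe how the CG sends client traffic toward the uplink switch.

    ssid_vlan: VLAN ID configured on the SSID, or None if tagging is disabled.
    """
    # Untagged cases: no VLAN assigned, VLAN 0, or the CG native VLAN.
    if ssid_vlan is None or ssid_vlan == 0 or ssid_vlan == native_vlan:
        return "untagged"
    # Any other VLAN must be in the Uplink port client VLANs list.
    if ssid_vlan in allowed_client_vlans:
        return f"tagged:{ssid_vlan}"
    return "dropped"
```

For example, with a native VLAN of 1 and client VLANs 4 and 20 to 22, an SSID set to VLAN 4 leaves the CG tagged, while an SSID set to VLAN 30 has its traffic dropped.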

RADIUS-based SSID and Campus Gateway as a RADIUS Proxy

For the case of RADIUS-based SSIDs, Campus Gateway will act as a RADIUS Proxy, further optimizing the scale and manageability of large scale wireless deployments.

In a distributed Cisco cloud-managed deployment, all the APs had to be added as a Network Access Server (NAS) to the RADIUS server. This resulted in having to potentially add hundreds, if not thousands, of devices to the RADIUS server, leading to complex management of the RADIUS server.

By making the CG act as a RADIUS proxy, only the IP of the CG now needs to be added as a NAS, drastically simplifying RADIUS management. The APs will still serve as the authenticator and manage the clients’ AAA sessions, but now the APs will send all RADIUS traffic to the CG. The CG will send the traffic to the RADIUS server and relay the RADIUS response to the AP. All the RADIUS messages will be sent to the CG via the VXLAN tunnels. RADIUS servers specified for each SSID will be used in order of priority when configured. The CG acts as a RADIUS proxy only for the tunneled SSIDs. For locally bridged SSIDs, the AP talks to the AAA server directly, as it does today.

Note: The AAA traffic is sent via the VXLAN tunnel as this allows customers to take packet captures at the AP and analyze the exchange between the AP and RADIUS (QUIC is always encrypted).

Change of Authorization (CoA) is supported, including when it is deployed together with fast and secure roaming (802.11r/OKC).

Note: If you use multiple RADIUS servers and you want to load balance the authentications in a round robin fashion, please contact Meraki Support.

For CoA, ensure the RADIUS server is configured to use UDP port 1700 as the Campus Gateway expects the CoA packets to come on UDP port 1700. CoA requests will be dropped if the packets are received on a different port.

Client VLAN Assignment

When the client associates to the APs using 802.1X, or any other RADIUS based authentication, the AP will encapsulate the RADIUS messages in VXLAN to send them to Campus Gateway. The AAA server returns the VLAN (e.g. VLAN 20) in the Access-Accept message to the CG, and the CG forwards it back to the AP.

The AAA server can use the following to return the VLAN name / group / ID:

- Airespace-Interface Name

- Tunnel-Type (Attr. 64)

- Tunnel-Medium-Type (Attr. 65)

- Tunnel-Private-Group-ID (Attr. 81)

The AP stores the client VLAN and sets it in the VXLAN header when forwarding client traffic. The CG then bridges the traffic onto the VLAN. Please note that the VLAN returned by the AAA server needs to be included in the allowed VLAN list on the CG, which is defined at the cluster level. Otherwise, the traffic will be dropped at CG.
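A minimal sketch of how the returned attributes map to a VLAN is shown below. It assumes the Access-Accept has already been decoded into a dictionary keyed by attribute name, and that the tunnel attributes use the conventional IEEE 802.1X VLAN assignment values (Tunnel-Type 13 for VLAN, Tunnel-Medium-Type 6 for IEEE-802). The helper names are hypothetical, and resolving VLAN names or groups through VLAN profiles is not modeled.

```python
TUNNEL_TYPE_VLAN = 13         # Tunnel-Type value meaning "VLAN"
TUNNEL_MEDIUM_IEEE_802 = 6    # Tunnel-Medium-Type value meaning "IEEE-802"


def assigned_vlan(attrs):
    """Return the VLAN name/ID string carried in an Access-Accept, if any."""
    if (attrs.get("Tunnel-Type") == TUNNEL_TYPE_VLAN
            and attrs.get("Tunnel-Medium-Type") == TUNNEL_MEDIUM_IEEE_802):
        return attrs.get("Tunnel-Private-Group-ID")
    # Fall back to the Airespace interface name when present.
    return attrs.get("Airespace-Interface-Name")


def is_forwarded_by_cg(vlan, allowed_client_vlans):
    """The CG bridges traffic only for VLAN IDs in the cluster's allowed list."""
    return vlan is not None and vlan.isdigit() and int(vlan) in allowed_client_vlans
```

For instance, an Access-Accept carrying Tunnel-Private-Group-ID `20` is honored only if VLAN 20 is among the Uplink port client VLANs of the cluster; otherwise the client's traffic is dropped at the CG.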

Client VLAN Assignment Priority

There are different methods to assign a VLAN. If multiple methods are applied, they are evaluated in the following order, from highest to lowest priority:

- Roaming Client (saved VLAN from first association)

- Per-client Group policy

- Per-device type Group policy

- RADIUS assigned VLAN

- SSID defined VLAN
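The priority order can be expressed as a first-match lookup. The source keys below are illustrative labels for the five methods in the list; they are not Dashboard identifiers.

```python
# Highest priority first, mirroring the list above.
VLAN_SOURCE_PRIORITY = (
    "roaming_client",
    "per_client_group_policy",
    "per_device_type_group_policy",
    "radius",
    "ssid",
)


def resolve_client_vlan(candidates):
    """Pick the VLAN from the highest-priority method that supplies one.

    candidates: dict of source label -> VLAN (or None when not applicable).
    Returns (source, vlan), or None if no method supplies a VLAN.
    """
    for source in VLAN_SOURCE_PRIORITY:
        vlan = candidates.get(source)
        if vlan is not None:
            return source, vlan
    return None
```

For example, a client with a RADIUS assigned VLAN of 20 on an SSID configured for VLAN 4 ends up in VLAN 20, unless a group policy or a saved roaming VLAN applies.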

SSID Switching Type Co-Existence

For brownfield Cisco cloud-managed wireless deployments, there will be existing SSIDs that are locally switched at the APs. It is not expected that all these SSIDs will now be centralized at Campus Gateway all at once. Migration will happen in phases. Thus, the coexistence of locally bridged and centrally tunneled SSIDs will be supported on the same APs. Clients can connect to locally bridged SSIDs while other clients can connect to the centrally tunneled SSIDs at the same time.

Adaptive Policy

Adaptive Policy works as it did before: it is configured per Meraki Network and applied at the AP. Dashboard will push the configurations for Security Group Tags (SGTs) and Security Group Access Control List (SGACL) policies to the APs. The APs learn the local clients’ SGT via the RADIUS Access-Accept response message during client authentication or via static configuration in Dashboard.

Upstream at AP level, the AP will insert the SGT in the segment ID (SID or policy) in the VXLAN header. The CG will use this when it bridges the traffic by inserting the received SGT into the CMD header of the 802.1q packet. The policy is then applied at the destination device (switch or AP).

Downstream at Campus Gateway, the CG reads the SGT from the CMD header and copies it into the SID in the VXLAN header. The policy is applied at the AP. The source SGT is received in the VXLAN header, while the destination SGT is known as it belongs to the locally managed client. From there, the SGT ACL policy is applied.

For CG, enabling Adaptive Policy will occur in the following scenarios:

- Campus Gateway is added to a network with Adaptive Policy already enabled

- Adaptive Policy is enabled on a network with Campus Gateway already added

Configuring Adaptive Policy on Campus Gateway

Campus Gateway is added to a network with Adaptive Policy already enabled

When Campus Gateway is added to a network which already has Adaptive Policy enabled, it will not have the necessary configurations which enable the infrastructure Adaptive Policy. Specifically, the configs to enable the inline SGTs on the CG and set the uplink ports to be tagged with SGT 2 (the standard infrastructure to infrastructure tag) will not be present by default.

If the uplink switch ports are configured for infrastructure Adaptive Policy, the CG will not have connectivity to the network as these configs are not present.

To provide connectivity and enable Adaptive Policy, either the upstream switch can be modified to temporarily disable infrastructure Adaptive Policy on the switch ports connected to CG or the Local Status Page of the CG can be used to enable Adaptive Policy on the uplink ports.

Modifying the Upstream Switch

- Temporarily disable infrastructure Adaptive Policy on the uplink switch ports connected to the CG. This will allow the CG to download its configurations and enable Adaptive Policy on its uplink ports. Because Adaptive Policy is still disabled on the uplink switch ports at this point, the CG will temporarily lose connectivity.

- Re-enable infrastructure Adaptive Policy on the uplink switch ports connected to the CG. This will restore the connectivity for the CG.
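The connectivity changes during this procedure can be traced with a short Python sketch. It assumes a simplified rule, which is an interpretation of the behavior described above rather than a documented algorithm: the uplink passes traffic only when the CG and the switch port agree on whether infrastructure Adaptive Policy (inline SGT tagging) is enabled. The function and stage names are hypothetical.

```python
def uplink_has_connectivity(cg_inline_sgt: bool, switch_inline_sgt: bool) -> bool:
    # Assumption: the link passes traffic only when both ends agree on
    # whether frames carry an inline SGT.
    return cg_inline_sgt == switch_inline_sgt


# (stage, CG Adaptive Policy enabled, switch port Adaptive Policy enabled)
stages = [
    ("CG added, switch port already enabled", False, True),
    ("Switch port temporarily disabled", False, False),
    ("CG downloads config and enables Adaptive Policy", True, False),
    ("Switch port re-enabled", True, True),
]

timeline = [(name, uplink_has_connectivity(cg, sw)) for name, cg, sw in stages]
for name, ok in timeline:
    print(f"{name}: {'connected' if ok else 'no connectivity'}")
```

Under this assumption the CG is reachable only in the second and fourth stages, which matches the temporary loss of connectivity noted in the first step.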

For steps on how to enable/disable infrastructure Adaptive Policy on the uplink switch ports, please see the following guides:

- On-Prem managed Catalyst Switches: https://documentation.meraki.com/General_Administration/Cross-Platform_Content/Deploying_Catalyst_Switches_as_the_Core_of_a_Meraki_Adaptive_Policy_Switching_Network

- Cloud-Managed Catalyst Switches / Meraki MS Switches: https://documentation.meraki.com/General_Administration/Cross-Platform_Content/Adaptive_Policy_MS_Configuration_Guide

Using the Local Status Page of Campus Gateway

- Log in to the Local Status Page of the CG

- Go to Configuration and open the Adaptive Policy section

- Set the status to Enabled and set the Source Group Tag to match what is configured on the uplink switch port. This will typically be set to 2 for infrastructure to infrastructure communication.

Note: The SGT value can be found in either the upstream switch port configurations or, for Cisco cloud-managed devices, in Organization > Adaptive policy > Groups page under Infrastructure.

- Click Save Changes. The CG will get connectivity to Dashboard and download the configurations.

Adaptive Policy is enabled on a network with Campus Gateway already added

For more information on how to enable Adaptive Policy for a network, please see the guide here: https://documentation.meraki.com/General_Administration/Cross-Platform_Content/Adaptive_Policy_Configuration_Guide

- Enable Adaptive Policy for the network.

- After Adaptive Policy is enabled, the CG will receive the configuration to enable infrastructure Adaptive Policy on its uplink ports.

Note: This may result in the CG temporarily losing connectivity. To restore connectivity, enable infrastructure Adaptive Policy on the uplink switch port connected to the CG. From this point onwards, the CG will stay connected.

Quality of Service (QoS) Best Practices

Control Plane (CP) QoS

The QUIC Tunnel traffic is marked with DSCP = CS6.
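When verifying this marking in a packet capture, it helps to know the numeric values behind CS6. The short Python sketch below works them out from the standard DSCP layout (class selector CSn occupies the top three bits of the 6-bit DSCP field, and the DSCP occupies the top six bits of the IP ToS / Traffic Class byte). The helper names are hypothetical.

```python
def class_selector(n: int) -> int:
    """Return the DSCP value for class selector CSn (n in 0..7)."""
    if not 0 <= n <= 7:
        raise ValueError("class selector must be 0..7")
    return n << 3


def tos_byte(dscp: int, ecn: int = 0) -> int:
    """Pack a 6-bit DSCP and a 2-bit ECN into the IP ToS / Traffic Class byte."""
    return (dscp << 2) | ecn


cs6 = class_selector(6)
print(cs6, bin(cs6), hex(tos_byte(cs6)))  # 48 0b110000 0xc0
```

In other words, the control plane packets should show a DSCP of 48, or a ToS byte of 0xC0 when the ECN bits are zero.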

Data Plane (DP) QoS

For client traffic, Network Based Application Recognition (NBAR) runs on the AP, recognizing and classifying traffic at the AP level. By doing so, any client QoS policy will still be implemented at the AP.

Campus Gateway supports the “DSCP trust” QoS model. This means that, upstream at the AP and downstream at the CG, the DSCP value in the VXLAN header (not the Layer 2 value, UP or CoS) is used to process the QoS information in the packet and apply the policy.

The above image illustrates that the DSCP value is transferred unchanged, based on the assumption that no additional Quality of Service (QoS) policies are being implemented.

If a policy is in place (e.g., rate limiting or remarking of DSCP), the AP applies the policy. For upstream traffic, the AP copies the client DSCP into the outer header. For downstream traffic, the policy is applied only at the AP. The CG doesn’t enforce any QoS policy as it trusts the client DSCP.

For how to configure Wireless QoS, please see the guide here: https://documentation.meraki.com/MR/Wi-Fi_Basics_and_Best_Practices/Wireless_QoS_and_Fast_Lane

mDNS Gateway and WPN

How is mDNS traffic handled by Campus Gateway?

At launch, Campus Gateway will only support two mDNS modes:

- mDNS Drop

  - All mDNS packets sourced from clients will be dropped by Campus Gateway

  - If mDNS Gateway is not enabled, Campus Gateway will drop all mDNS packets

- mDNS Gateway

  - mDNS packets sourced from clients on Campus Gateway's wireless client VLANs are cached by Campus Gateway

  - Campus Gateway will unicast back the cached mDNS services in response to an mDNS query from a client connected to the Campus Gateway tunneled SSIDs

By default, Campus Gateway will be in mDNS drop mode. In order for any mDNS services to be shared across VLANs, mDNS gateway will need to be enabled on the SSID that is tunneled to Campus Gateway.

Currently, mDNS bridging functionality will not be available for Campus Gateway.

Why mDNS gateway?

The mDNS gateway provides an efficient and scalable way to share mDNS services between clients across different VLANs. Rather than continuously broadcasting the mDNS services over the air, which consumes airtime, the mDNS services are cached by Campus Gateway. The CG will unicast back the response to an mDNS query from a client. As a result, mDNS services are only sent to the clients that request them, without having to be broadcast over the air.

When the client advertises its mDNS capabilities, the access point will encapsulate this within the VXLAN packet. Campus Gateway will take the packet and de-encapsulate it. When it receives the mDNS payload, Campus Gateway will store the mDNS PNT. When a client does an mDNS query, Campus Gateway will unicast back the mDNS response payload to the client by encapsulating this information within the VXLAN tunnel as it sends it to the access point the client is connected to.

To better understand how the mDNS gateway function works, let's look at the example below. The querying client laptop is connected to the Corp SSID and placed on VLAN 10. The advertising client Printer A is connected to the IoT SSID and placed on VLAN 20. When Printer A advertises its printer capabilities using mDNS, Campus Gateway will cache the service in the mDNS gateway. When the client sends an mDNS query for available printers, Campus Gateway will see this request and unicast back all the mDNS printer services in its cache, allowing the client on VLAN 10 to discover Printer A on VLAN 20.

mDNS Gateway supports policy-based filtering on each WLAN. Policy-based filtering defines which types of mDNS services should be learned and returned across VLANs. Only the allowed services will be cached and allowed to be discovered across VLANs. Anything else will be dropped. For example, if a network admin only allows AirPlay and Printers, only those discovered services will be cached. If an mDNS advertisement for Chromecast is received, Campus Gateway will drop those mDNS packets since Chromecast is not an allowed service.
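The cache-and-filter behavior can be sketched in a few lines of Python. This is a simplified model, not the CG implementation: the class and method names are hypothetical, and the DNS-SD service type strings are shown only as examples of how AirPlay, printers (IPP), and Chromecast are commonly advertised. How Dashboard maps its service names to service types is not covered here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceRecord:
    service_type: str  # e.g. "_ipp._tcp.local"
    instance: str      # e.g. "Printer A"
    vlan: int


@dataclass
class MdnsGateway:
    allowed_types: set
    cache: list = field(default_factory=list)

    def on_advertisement(self, record: ServiceRecord) -> bool:
        """Cache the record if its type is allowed; otherwise drop it."""
        if record.service_type not in self.allowed_types:
            return False
        if record not in self.cache:
            self.cache.append(record)
        return True

    def on_query(self, service_type: str) -> list:
        """Return cached records of the queried type, regardless of VLAN."""
        return [r for r in self.cache if r.service_type == service_type]


# Only AirPlay and printer services are allowed in this example policy.
gw = MdnsGateway(allowed_types={"_airplay._tcp.local", "_ipp._tcp.local"})
gw.on_advertisement(ServiceRecord("_ipp._tcp.local", "Printer A", vlan=20))
gw.on_advertisement(ServiceRecord("_googlecast._tcp.local", "Lobby TV", vlan=20))
print([r.instance for r in gw.on_query("_ipp._tcp.local")])  # ['Printer A']
```

The Chromecast advertisement never enters the cache, so a later query for it returns nothing, while the printer on VLAN 20 is returned to a querier on any VLAN.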

For Campus Gateway, the mDNS Gateway supports the following:

| Parameter | Supported value |
|---|---|
| Scale | 20K unique service records (# of service providers + # of services) |
| Time to Live (TTL) per service record | 4500 seconds |
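To make the TTL figure concrete, 4500 seconds is 75 minutes. The Python sketch below shows one way a per-record lifetime could behave. It assumes that each new advertisement restarts the record's lifetime and that a record is dropped once its TTL has elapsed; both are assumptions for illustration, and the class name is hypothetical.

```python
RECORD_TTL_SECONDS = 4500  # from the table above (75 minutes)


class TtlCache:
    def __init__(self, ttl: float = RECORD_TTL_SECONDS):
        self.ttl = ttl
        self._expires = {}  # record key -> expiry timestamp in seconds

    def learn(self, key: str, now: float) -> None:
        # Assumption: a fresh advertisement (re)starts the record's lifetime.
        self._expires[key] = now + self.ttl

    def live(self, now: float) -> list:
        # Drop expired entries, then return the keys that are still valid.
        self._expires = {k: t for k, t in self._expires.items() if t > now}
        return sorted(self._expires)


cache = TtlCache()
cache.learn("Printer A", now=0)
print(cache.live(now=4499))  # ['Printer A']
print(cache.live(now=4500))  # []
```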

Location Specific Services (LSS) Support

By default, location specific services (LSS) will be enabled whenever mDNS gateway is enabled, and it cannot be disabled. LSS further optimizes the mDNS gateway function and avoids sending unnecessary mDNS traffic over the air. It does this by allowing a client to receive only the mDNS services advertised by clients that are near it.

The mDNS services returned in response to a client's mDNS query will only be services from clients connected to access points near the access point the querying client is associated to. This is based on the 24 strongest neighbors of the associated AP. Any services discovered on other APs will be filtered out and not discovered by the client. This prevents clients from having to see and discover mDNS services from a completely different part of the campus, which they would not necessarily be connecting to in the first place.

For example, when Laptop A is querying for a printer which it can use, Printer A is associated to an AP which is in the list of 24 strongest neighbors of the AP that Laptop A is associated to, so it will be discovered by Laptop A. Laptop A does not discover Printer B since Printer B is associated to an AP not in the list of strongest neighbor APs.
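The LSS filtering step can be sketched as follows. This is an illustrative Python model only: it assumes neighbor strength is ranked by RSSI in dBm and that services on the client's own AP are always eligible, neither of which is stated explicitly above, and the function names are hypothetical.

```python
LSS_NEIGHBOR_LIMIT = 24


def strongest_neighbors(neighbor_rssi: dict, limit: int = LSS_NEIGHBOR_LIMIT) -> set:
    """Pick the APs heard most strongly (RSSI in dBm, higher is stronger)."""
    ranked = sorted(neighbor_rssi, key=neighbor_rssi.get, reverse=True)
    return set(ranked[:limit])


def lss_filter(services: list, client_ap: str, neighbor_rssi: dict) -> list:
    """Keep services whose advertiser is on the client's AP or a top neighbor."""
    # Assumption: services on the client's own AP are always eligible.
    nearby = strongest_neighbors(neighbor_rssi) | {client_ap}
    return [name for name, ap in services if ap in nearby]


# Laptop A is on AP-0, which hears 30 neighbors: AP-1 (-41 dBm) through AP-30 (-70 dBm).
neighbors = {f"AP-{i}": -40 - i for i in range(1, 31)}
services = [("Printer A", "AP-3"), ("Printer B", "AP-30")]
print(lss_filter(services, "AP-0", neighbors))  # ['Printer A']
```

Printer B is filtered out because AP-30 ranks below the 24 strongest neighbors of the laptop's AP.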

Configuring mDNS Gateway

mDNS gateway is configured as follows:

Step 1: Go to Wireless > Configure > Access Policy and select the SSID to be tunneled.

Step 2: In the Client IP and VLAN > External DHCP server assigned > Tunneled > Tunnel mode with Campus Gateway (MCG), set the toggle for mDNS gateway to enable.

Step 3: Select all the necessary mDNS services which clients should be able to discover across VLANs.

What about WPN support?

In order for WPN to operate with mDNS traffic, mDNS gateway must be enabled. In this case, when Campus Gateway receives the mDNS advertisement from the client, it will also receive the UDN ID used for WPN after the VXLAN tunnel is encapsulated. The CG will store the mDNS service along with the corresponding UDN ID of the client that advertised the service. Now, when a client sends an mDNS query for a service, the CG will unicast back a response with the mDNS service only if the UDN ID of the querying client matches the UDN ID of the stored services in Campus Gateway.
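The UDN ID check can be expressed as one extra condition on the cache lookup. The Python sketch below is a simplified model with hypothetical names and example values, not the CG implementation.

```python
from typing import NamedTuple


class CachedService(NamedTuple):
    instance: str
    service_type: str
    udn_id: int  # UDN ID of the client that advertised the service


def wpn_response(cache: list, service_type: str, querier_udn_id: int) -> list:
    """Return only services advertised from the querier's own UDN."""
    return [
        s.instance
        for s in cache
        if s.service_type == service_type and s.udn_id == querier_udn_id
    ]


cache = [
    CachedService("Alice's Speaker", "_airplay._tcp.local", udn_id=101),
    CachedService("Bob's Speaker", "_airplay._tcp.local", udn_id=202),
]
print(wpn_response(cache, "_airplay._tcp.local", querier_udn_id=101))
# ["Alice's Speaker"]
```

A client in UDN 101 only sees the speaker registered in the same UDN, even though both speakers advertise the same service type.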

To enable WPN on a tunneled SSID, please follow the steps outlined in the Configuration Section of the Wi-Fi Personal Network (WPN) guide.

Software Upgrades

The first software release version that supports Campus Gateway is MCG31.2.X and the corresponding AP version will be MR31.2.X.

The CG and AP firmware versions must be kept identical. For example, if the CG is updated to MCG31.2.5, the APs will need to run MR31.2.5 as well.
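A quick way to audit this requirement is to compare the release number after the product prefix. The Python sketch below assumes version strings of the form shown in this guide (such as MCG31.2.5 and MR31.2.5); the strings reported elsewhere may be formatted differently, and the function names are hypothetical.

```python
import re

# Assumption: versions look like "MCG31.2.5" or "MR31.2.5", as written in this guide.
_VERSION = re.compile(r"^(MCG|MR)(\d+(?:\.\d+)*)$")


def release_number(version: str) -> str:
    """Strip the product prefix: 'MCG31.2.5' -> '31.2.5'."""
    match = _VERSION.match(version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return match.group(2)


def versions_match(cg_version: str, ap_version: str) -> bool:
    """True when the CG and AP run the same release number."""
    return release_number(cg_version) == release_number(ap_version)


print(versions_match("MCG31.2.5", "MR31.2.5"))  # True
print(versions_match("MCG31.2.5", "MR31.2.4"))  # False
```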

The CG and corresponding AP image firmware will be available in the Firmware Upgrades page. Choosing the CG image will automatically select the AP image to be upgraded as well.

For more information on how to upgrade the firmware for devices, please refer to the guide here: https://documentation.meraki.com/General_Administration/Firmware_Upgrades/Managing_Firmware_Upgrades

During the firmware upgrade, Dashboard will upgrade the CG first, followed by the APs. Both upgrade strategies, Minimize upgrade time and Minimize client downtime, are supported.

For more information about the firmware upgrades options, please refer to the guide here: https://documentation.meraki.com/MR/Other_Topics/Access_Point_Firmware_Upgrade_Strategy

Minimize Upgrade Time

Choosing Minimize upgrade time will lead to a faster upgrade time at the expense of some downtime for the clients. During the upgrade, all the Campus Gateways in the cluster will upgrade to the new code version at the same time. Once they are done, all the APs in the network will be upgraded at the same time.

Minimize Client Downtime

Note: This requires the Campus Gateways to be in a cluster of 2. Standalone Campus Gateway deployments will not be supported for this upgrade strategy.

To use the Minimize client downtime upgrade strategy, there are some requirements that must be met.

- The Campus Gateways must be deployed in a cluster of 2. Standalone CG deployments are not supported.

- There must be adequate overlapping RF coverage between APs at the site, so if an AP reloads there is still enough RF coverage for clients