Private Cloud vMX Sizing Guide

This document outlines the VPN performance of the vMX platform on a UCS C220 M5 reference compute platform in scenarios that mimic common deployment use cases. When sizing a deployment, analyze the anticipated traffic mix and expected tunnel count, and size each instance to run at 80% of capacity or less. The numbers below were validated on MX14.39 firmware.

The VPN tunnel counts below are the maximum number of tunnels that can be established simultaneously with minimal traffic passing through them. Actual throughput and tunnel counts will vary with traffic volume, traffic type, and packet size. As a deployment scales, use the performance metrics in the Organization > Summary report (or the API) to determine when to scale out and add another instance.
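The 80% rule above can be turned into a quick instance count: divide the anticipated demand by 80% of one instance's capacity, separately for throughput and for tunnels, and take the larger result. The sketch below is a hypothetical helper, not a Meraki tool; it assumes load spreads evenly across identical instances, and the per-instance capacities you plug in should come from measurements that match your traffic mix (for example, the use-case results below) rather than the ideal-condition maximums.

```python
# Hypothetical sizing helper; the function and parameter names are
# illustrative and are not part of any Meraki tool or API.

MAX_UTIL_PCT = 80  # size each instance to 80% of capacity or less


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division (avoids floating-point rounding)."""
    return -(-a // b)


def instances_needed(demand_mbps: int, demand_tunnels: int,
                     cap_mbps: int, cap_tunnels: int,
                     max_util_pct: int = MAX_UTIL_PCT) -> int:
    """Return how many identical vMX instances keep both throughput and
    tunnel count at or below max_util_pct of one instance's capacity.
    Assumes demand is spread evenly across the instances."""
    by_throughput = ceil_div(demand_mbps * 100, cap_mbps * max_util_pct)
    by_tunnels = ceil_div(demand_tunnels * 100, cap_tunnels * max_util_pct)
    return max(1, by_throughput, by_tunnels)


# Example: 2500 Mbps and 1500 tunnels against a 1000 Mbps / 1000-tunnel
# instance. Throughput needs 4 instances, tunnels need 2, so 4 wins.
print(instances_needed(2500, 1500, 1000, 1000))  # 4
```

Integer arithmetic keeps exact boundaries exact: 40 tunnels on a 50-tunnel instance fits in one instance, while 41 tips over to two.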

vMX Instance Results

|  | Small | Medium |
|---|---|---|
| Per-vMX VPN Tunnels | 50 | 1000 |
|  | 200 Mbps | 1000 Mbps |
|  | 700,000 | 1,400,000 |
|  | 2000 | 2000 |

These are maximum possible values based on ideal conditions on a single VM. Performance will vary in real-world environments and numbers may be lower.

*Unidirectional flow over 1 tunnel

**RFC 2544 Test Parameters (IXIA Breaking Point): 60s, UDP, 1 flow, unidirectional, 1415 byte frame size, 0.000% loss
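RFC 2544 expresses throughput as a frame rate, and the footnote fixes the frame size at 1415 bytes, so a bit rate maps directly to frames per second. The sketch below converts the 200 Mbps and 1000 Mbps figures, assuming they are bit rates measured at that frame size. The guide does not say whether the reported rate counts Ethernet preamble and inter-frame gap, so both variants are computed; the function name is illustrative.

```python
BITS_PER_BYTE = 8
ETHERNET_L1_OVERHEAD_BYTES = 20  # preamble (7) + SFD (1) + inter-frame gap (12)


def frames_per_second(rate_bps: int, frame_bytes: int = 1415,
                      include_l1_overhead: bool = False) -> int:
    """Whole frames per second that fit in rate_bps at a fixed frame size."""
    overhead = ETHERNET_L1_OVERHEAD_BYTES if include_l1_overhead else 0
    return rate_bps // ((frame_bytes + overhead) * BITS_PER_BYTE)


for mbps in (200, 1000):
    rate = mbps * 1_000_000
    print(mbps, frames_per_second(rate),
          frames_per_second(rate, include_l1_overhead=True))
# 200 17667 17421
# 1000 88339 87108
```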

Use Cases

K-12 School Environment

|  | Small | Medium |
|---|---|---|
|  | 470 Mbps | 850 Mbps |
| 500 Tunnels Established | - |  |
| 1000 Tunnels Established | - | 802 Mbps |

*Traffic traversing 1 tunnel, remaining tunnels are simply maintained

Higher Education

|  | Small | Medium |
|---|---|---|
|  | 273 Mbps | 553 Mbps |
| 500 Tunnels Established | - |  |
| 1000 Tunnels Established | - | 468 Mbps |

*Traffic traversing 1 tunnel, remaining tunnels are simply maintained

Retail Environment

|  | Small | Medium |
|---|---|---|
|  | 243 Mbps | 597 Mbps |
| 500 Tunnels Established | - |  |
| 1000 Tunnels Established | - | 526 Mbps |

*Traffic traversing 1 tunnel, remaining tunnels are simply maintained

Call Center Workloads

|  | Small | Medium |
|---|---|---|
|  | 129 Mbps | 192 Mbps |
| 500 Tunnels Established | - |  |
| 1000 Tunnels Established | - | 163 Mbps |

*Traffic traversing 1 tunnel, remaining tunnels are simply maintained
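The use-case tables can be read against the 80% sizing rule from the introduction. The sketch below takes the Medium figures from the four tables, reports what fraction of each table's first Medium figure remains at 1000 tunnels, and derates the 1000-tunnel figure to 80% as a planning ceiling. Treating the first Medium figure as a low-tunnel-count baseline is an assumption, and the dictionary keys and function name are illustrative.

```python
# Medium-instance figures from the four use-case tables (Mbps).
# "baseline" is the first figure in each table's Medium column; treating it
# as the low-tunnel-count reference point is an assumption.
USE_CASES = {
    "K-12 School Environment": {"baseline": 850, "tunnels_1000": 802},
    "Higher Education": {"baseline": 553, "tunnels_1000": 468},
    "Retail Environment": {"baseline": 597, "tunnels_1000": 526},
    "Call Center Workloads": {"baseline": 192, "tunnels_1000": 163},
}
MAX_UTIL_PCT = 80  # size each instance to 80% of capacity or less


def summarize(use_cases: dict) -> dict:
    """Map each use case to (fraction of baseline kept at 1000 tunnels,
    80%-derated 1000-tunnel throughput in Mbps)."""
    rows = {}
    for name, mbps in use_cases.items():
        retained = mbps["tunnels_1000"] / mbps["baseline"]
        planning = mbps["tunnels_1000"] * MAX_UTIL_PCT / 100
        rows[name] = (round(retained, 3), planning)
    return rows


for name, (retained, planning) in summarize(USE_CASES).items():
    print(f"{name}: {retained:.1%} of baseline at 1000 tunnels, "
          f"plan for <= {planning:.1f} Mbps")
```

The call center mix keeps the least headroom in absolute terms (about 130 Mbps after derating), which matches the intuition that small-packet, latency-sensitive traffic costs the most per bit.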

UCS Sizing Considerations

There are two models, High Performance and High Capacity. The only difference between them is the amount of memory.

The High Performance model includes 128 GB of memory and is targeted at a maximum of 48 Small instances or 24 Medium instances with no oversubscription, since the UCS has 48 physical cores available.

The High Capacity model is aimed at deployments that need small headend instances to terminate small deployments (up to 25 tunnels per vMX instance) with relatively low bandwidth requirements. It includes 384 GB of memory and runs with hyperthreading enabled so that virtual machines (VMs) can be oversubscribed, up to a 3:1 ratio, for a maximum of 144 Small instances. We do not recommend oversubscribing Medium instances: doing so gives up much of their performance advantage at scale. For Medium deployments, use the High Performance model instead.
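The instance limits above follow from simple core arithmetic. The sketch below reproduces them, assuming a Small vMX uses 1 vCPU and a Medium uses 2; those vCPU counts are inferred from the 48-versus-24 split on 48 cores, not stated in this guide, so check them against the actual vMX VM profile. It also shows that, ignoring anything reserved for the hypervisor, memory per Small instance comes out the same on both models.

```python
from fractions import Fraction

PHYSICAL_CORES = 48  # 2 x 24-core CPUs on the UCS C220 M5

# Assumed vCPU counts, inferred from 48 Small vs 24 Medium instances on
# 48 cores; not stated in the guide.
VCPUS = {"Small": 1, "Medium": 2}


def max_instances(size: str, oversubscription: int = 1) -> int:
    """Instances that fit when total vCPUs may reach
    oversubscription x physical cores."""
    return PHYSICAL_CORES * oversubscription // VCPUS[size]


print(max_instances("Small"), max_instances("Medium"))  # 48 24
print(max_instances("Small", oversubscription=3))       # 144

# 128 GB / 48 instances equals 384 GB / 144 instances (8/3 GB, about 2.67 GB).
print(Fraction(128, 48) == Fraction(384, 144))          # True
```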

Reference Platform

|  | High Performance | High Capacity |
|---|---|---|
| Memory | 128 GB | 384 GB |

Both models: UCS C220 M5, 4 x 10G SFP NICs in an LACP bond, 1.2 TB storage (RAID 1), 2 x 24-core CPUs (48 physical cores total).

Testing Methodology

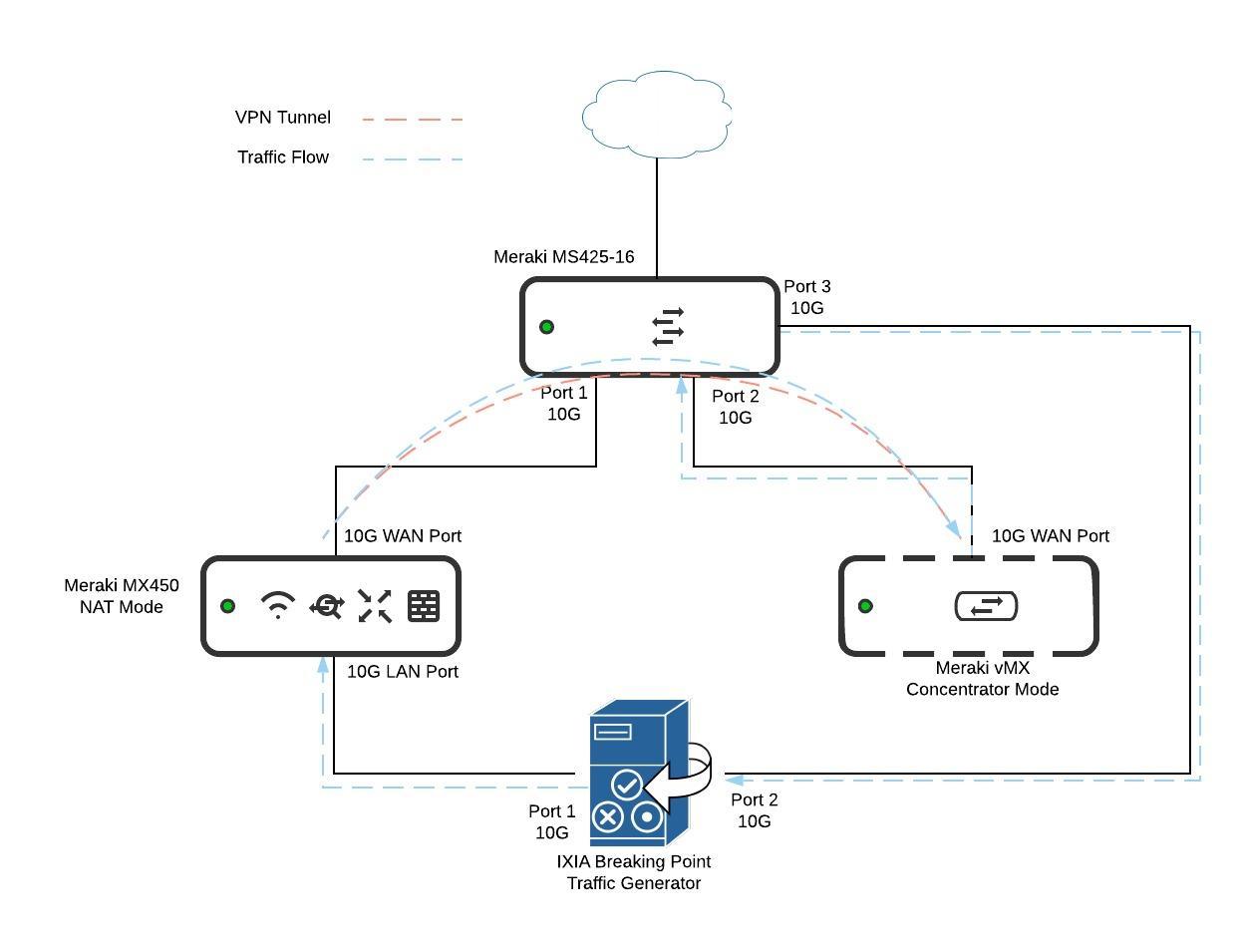

The diagram above represents the testing methodology behind the sizing numbers quoted in this document. For each use case above, the Ixia profile used to generate the test can be downloaded and run on your own Ixia chassis. For each number displayed, the Ixia test report used to obtain that number can also be downloaded.

Requirements

The following devices were used in the deployment:

- 1 x Ixia Breaking Point traffic generator

- 1 x MX450

- 1 x MS425-16

- 1 x Small vMX or Medium vMX running on the above UCS reference compute platform

Design

Ixia Setup

On the Ixia, port 1 is connected to the LAN of the MX450 and port 2 is connected to port 3 of the switch.

Switch Setup

On the switch, port 1 is configured in VLAN 1, and ports 2 and 3 are configured as access ports in VLAN 2.
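If you script the lab rather than configuring it in Dashboard, the switch port plan above could be expressed as calls to the Meraki Dashboard API v1 "Update a switch port" endpoint (`PUT /devices/{serial}/switch/ports/{portId}`). The sketch below only builds the requests and sends nothing. The serial number is a hypothetical placeholder, making port 1 an access port is an assumption, and the endpoint and body fields should be checked against the current API reference before use.

```python
import json

BASE_URL = "https://api.meraki.com/api/v1"
SWITCH_SERIAL = "Q2XX-XXXX-XXXX"  # hypothetical placeholder for the MS425-16 serial

# Port -> VLAN plan from the switch setup. Making port 1 an access port is
# an assumption; the guide only says it is in VLAN 1.
PORT_VLANS = {1: 1, 2: 2, 3: 2}


def switch_port_requests(serial: str, port_vlans: dict) -> list:
    """Return (method, url, json_body) tuples, one per port; nothing is sent."""
    reqs = []
    for port, vlan in sorted(port_vlans.items()):
        url = f"{BASE_URL}/devices/{serial}/switch/ports/{port}"
        reqs.append(("PUT", url, json.dumps({"type": "access", "vlan": vlan})))
    return reqs


for method, url, body in switch_port_requests(SWITCH_SERIAL, PORT_VLANS):
    print(method, url, body)
```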

MX450 Setup

The MX450 was configured as a VPN spoke in NAT mode, with the vMX under test as its VPN hub.

vMX Setup

The vMX was configured as a VPN concentrator in hub mode and advertised the VLAN 2 subnet to the spoke (configured via the local subnets field on the Site-to-site VPN page).
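The hub and spoke settings above could likewise be scripted against the Meraki Dashboard API v1 site-to-site VPN endpoint (`PUT /networks/{networkId}/appliance/vpn/siteToSiteVpn`). The sketch below only builds the two request bodies and sends nothing. The network IDs and the VLAN 2 subnet are hypothetical placeholders (the guide does not give them), `useDefaultRoute: False` is an assumption, and whether the endpoint accepts these fields for a concentrator-mode vMX network should be confirmed in the API reference.

```python
import json

BASE_URL = "https://api.meraki.com/api/v1"

# Hypothetical placeholders: substitute real network IDs and the VLAN 2 subnet.
VMX_NETWORK_ID = "N_0000000000000001"
MX450_NETWORK_ID = "N_0000000000000002"
VLAN2_SUBNET = "10.0.2.0/24"


def site_to_site_request(network_id: str, body: dict) -> tuple:
    """Return (method, url, json_body) for a site-to-site VPN update; nothing is sent."""
    url = f"{BASE_URL}/networks/{network_id}/appliance/vpn/siteToSiteVpn"
    return ("PUT", url, json.dumps(body))


# vMX under test: hub that advertises the VLAN 2 subnet into the VPN.
vmx_request = site_to_site_request(VMX_NETWORK_ID, {
    "mode": "hub",
    "subnets": [{"localSubnet": VLAN2_SUBNET, "useVpn": True}],
})

# MX450: spoke whose only hub is the vMX under test
# (not using the hub as default route is an assumption).
mx450_request = site_to_site_request(MX450_NETWORK_ID, {
    "mode": "spoke",
    "hubs": [{"hubId": VMX_NETWORK_ID, "useDefaultRoute": False}],
})

for method, url, body in (vmx_request, mx450_request):
    print(method, url, body)
```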

Traffic Flow

In the diagram above, an Auto VPN tunnel is built between the MX450 and the vMX across the switch fabric. This keeps all packets between the two devices switched locally on the switch rather than passing through another gateway device, which gives the cleanest possible test. The blue arrows show the path traffic takes as it leaves the Ixia toward the MX450 and returns to the Ixia on port 2.

Ixia Reports

In each Ixia report, the data rate is under Test Results for <test name> > Detail > Application Data Throughput. The data was sampled every second during the test run. To obtain each throughput figure quoted above, the samples in this section of the report were averaged over every 10 seconds, and the maximum of those 10-second averages is the quoted throughput.
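The reduction described above (per-second samples, 10-second averages, then the maximum) can be sketched as follows. Reading "every 10s of data" as consecutive, non-overlapping windows is an assumption, as is ignoring a trailing partial window; the function name is illustrative and the sample values are made up for the example.

```python
from statistics import mean


def quoted_throughput(samples, window=10):
    """Max of the averages of consecutive, non-overlapping `window`-sample
    blocks (one sample per second). A trailing partial block is ignored."""
    usable = len(samples) - len(samples) % window
    if usable == 0:
        raise ValueError(f"need at least {window} samples")
    block_averages = [mean(samples[i:i + window])
                      for i in range(0, usable, window)]
    return max(block_averages)


# Example: a 30-second run whose middle 10 seconds average highest.
samples = [780] * 10 + [800] * 10 + [790] * 10
print(quoted_throughput(samples))  # 800
```

A 60-second run as in the RFC 2544 parameters yields six windows, so one unusually fast or slow second moves its window's average by only a tenth of its deviation.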