Meraki Campus LAN; Planning, Design Guidelines and Best Practices

Introduction

The Enterprise Campus

The enterprise campus is usually understood as that portion of the computing infrastructure that provides access to network communication services and resources to end-users and devices spread over a single geographic location. It might span a single floor, a building, or even a large group of buildings spread over an extended geographic area. Some networks will have a single campus that also acts as the core or backbone of the network and provides inter-connectivity between other portions of the overall network. The campus core can often interconnect the campus access, the data centre and WAN portions of the network. In the largest enterprises, there might be multiple campus sites distributed worldwide with each providing both end-user access and local backbone connectivity. From a technical or network engineering perspective, the concept of campus has also been understood to mean the high-speed Layer-2 and Layer-3 Ethernet switching portions of the network outside of the data centre. While all of these definitions of a campus network are still valid, they no longer completely describe the set of capabilities and services that comprise the campus network today.

The campus network, as defined for the purposes of the enterprise design guides, consists of the integrated elements that comprise the set of services used by a group of users and end-station devices that all share the same high-speed switching communications fabric. These include the packet-transport services (both wired and wireless), traffic identification and control (security and application optimization), traffic monitoring and management, and overall systems management and provisioning. These basic functions are implemented in such a way as to provide and directly support the higher-level services provided by the IT organization for use by the end-user community.

This document provides best practices and guidelines for deploying a Campus LAN with Meraki, covering both Wireless and Wired LAN.

Wireless LAN

Planning, Design Guidelines and Best Practices

Planning is key to a successful deployment and aims at collecting and validating the required design aspects for a given solution. The following section takes you through the whole design and planning process for Meraki Wireless LAN. Please pay attention to the key design items and how they will influence the design of the WLAN as well as other components of your architecture (e.g. LAN, WAN, Security, etc.).

Planning Your Deployment

The following points summarize the design aspects of a typical Wireless LAN that need to be taken into consideration. Please refer to Meraki documentation for more information about each of the following items.

- Get an estimation of the number of users per AP (Influenced by the AP model, should be the outcome of a coverage survey AND a capacity survey)

- Determine the total number of SSIDs that are required (do not exceed 5 per AP or generally speaking per "air" field such that 802.11 probes of no more than 5 SSIDs compete for airtime)

- For each of your SSIDs, determine their visibility requirements (Open, Hidden, Scheduled, etc) based on your policy and service requirements

- For each of your SSIDs, determine their association requirements (e.g. Open, PSK, iPSK, etc.) based on the clients' compatibility as well as the network policy

- SSID encryption requirements (e.g. WPA1&2, WPA2, WPA3 if supported, etc.). Please verify that the chosen option is supported on all clients connecting to this SSID and choose the lowest common denominator for a given SSID.

- Client Roaming requirements (if any) per SSID and how that will reflect on your Radio and network settings (e.g. Layer 2 roaming, Layer 3 roaming, 802.11r, OKC, etc). Review recommendations and pay attention to caveats mentioned in the following section.

- Wireless security requirements per SSID (e.g. Management Frame Protection, Mandatory DHCP, WIPS, etc.)

- Splash pages needed on your SSIDs (e.g. Meraki Splash page, External Captive Portal, etc.) and the format of your splash page (e.g. Click through, sign-on challenge, etc.)

- Splash page customizations (e.g. welcome message, company logo, special HTML parameters, etc.)

- Is Active Directory integration required for any of your SSIDs? (What is the connectivity to the AD server: IP route, VPN, etc.)

- Do you require integration with a Radius server? (What is the connectivity to the Radius server? How many Radius servers? Do you need to proxy Radius traffic? Any special EAP timers? Will CoA be required? Dynamic Group Policy assignment via Radius? Dynamic VLAN assignment via Radius? etc.)

- Client IP assignment and DHCP (Which SSID mode is suitable for your needs, which VLAN to tag your SSID traffic, etc)

- If you are tagging SSID traffic, ensure that the Access Point is connected to a trunk switch port and that the required VLANs are allowed

- Do you need to tag Radius and other traffic in a separate VLAN other than the management VLAN? (Refer to Alternate Management Interface)

- What are your traffic shaping requirements per SSID (e.g. Per SSID, per Client, per Application)

- What are your QoS requirements per SSID (Per Application settings). Remember, you will need to match this on your Wired network.

- Do you need group policies? (e.g. Per OS group policy, Per Client group policy, etc)

- What are the wired security requirements per SSID (e.g. Layer 2 isolation, IPv6 DHCP guard, IPv6 RA guard, L3/7 firewall rules, etc.)

- Are you integrating Cisco Umbrella with Meraki MR? (Requires a valid LIC-MR-ADV license or follow the manual integration guide)

- How do you want your SSIDs to be broadcast (e.g. On all APs, specific APs with a tag, all the time, per schedule, etc.)

- Is BLE scanning required?

- Is BLE beaconing required (What UUIDs and assignment method)

- What Radio profile best suits your AP(s) (e.g. Dual-band, Steering, 2.4 GHz off, channel width, Min and Max Power, Bit rate, etc.)

- Do you need multiple Radio profiles (e.g. per zone, per AP, per location, etc)

- Do you require end-to-end segmentation inclusive of the Wireless edge (Classification, Enforcement, etc.) using Security Group Tags? (Requires LIC-MR-ADV license)

- If enabling Adaptive Policy, choose the assignment method (Static vs Dynamic via Radius) and SGT per SSID

- Follow the guidance when configuring your Radius server (e.g. Cisco ISE) to enable dynamic VLAN/Group-Policy/SGT assignment

- For energy saving purposes, consider using SSID scheduling
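Two of the planning items above (the ceiling of 5 SSIDs per AP, and broadcasting some SSIDs only on tagged APs) can be checked together before any configuration is pushed. The following is a minimal sketch, not a Meraki API: the `PlannedSsid` class, the function names and the example SSID names are all hypothetical, and the only values taken from this document are the limit of 5 and the idea of zoning by AP tag.

```python
from dataclasses import dataclass

MAX_SSIDS_PER_AP = 5  # ceiling taken from the planning list above


@dataclass(frozen=True)
class PlannedSsid:
    # Hypothetical planning record, not a dashboard object.
    name: str
    ap_tags: frozenset = frozenset()  # empty means "broadcast on all APs"


def ssids_on_ap(plan, ap_tags):
    """Names of the planned SSIDs that an AP carrying `ap_tags` would broadcast."""
    tags = set(ap_tags)
    return [s.name for s in plan if not s.ap_tags or s.ap_tags & tags]


def within_ssid_limit(plan, ap_tags, limit=MAX_SSIDS_PER_AP):
    """True when the AP would broadcast no more than `limit` SSIDs."""
    return len(ssids_on_ap(plan, ap_tags)) <= limit


# Example plan: four SSIDs everywhere, two more only on lobby APs.
plan = [
    PlannedSsid("Corp"),
    PlannedSsid("Guest"),
    PlannedSsid("IoT"),
    PlannedSsid("Voice"),
    PlannedSsid("Events", frozenset({"Lobby_AP"})),
    PlannedSsid("Kiosk", frozenset({"Lobby_AP"})),
]
```

With this example plan, an AP tagged `Lobby_AP` would carry six SSIDs and fail the check, while an untagged AP carries four and passes. This suggests either collapsing SSIDs or narrowing the broadcast zones.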

Design Guidelines and Best Practices

The table in this section is organized into the following columns:

- Item: Design element (e.g. Wireless roaming)

- Best Practices: Available options and recommended setup for each (e.g. Bridge mode for seamless roaming)

- Notes: Additional supplementary information to explain how a feature works (e.g. For NAT mode SSID, the AP runs an internal DHCP server)

- Caution/Caveat/Consideration: Things to be aware of when choosing a design option and/or implications to the other network components (e.g. Layer 3 roaming mode requires AP to AP access on port UDP 9358)

Please pay attention to all sections in the table below to ensure that you get the best results from your Wireless LAN design.

| Item | Best Practices | Notes | Caution/Caveat/Consideration |

MR AP Management VLAN

|

Dynamic IP Assignment (DHCP) for zero-touch provisioning (untagged traffic to the upstream switch port and then DHCP discover in the configured native VLAN). Static IP Assignment: either pre-stage the AP or provide DHCP and then change the settings in dashboard. It is recommended (where possible) to assign a Management VLAN per zone (e.g. floor, communal area, etc.) for roaming and client balancing purposes. |

This management VLAN must be able to access the public internet. Read more here on the required IPs and Ports

|

For Dynamic IP assignment, make sure the upstream switch port has the correct native VLAN settings. For Static IP assignment, make sure the chosen VLAN is allowed on the upstream switch port. |

|

Use this feature if your Radius, SNMP and Syslog servers are not accessible via the public internet. Ensure that there's IP communication between the alternate management interface VLAN and your server(s) (e.g. Radius, SNMP and Syslog) |

The same Alternate Management Interface VLAN will be used for all APs in the same dashboard network. The Alternate Management Interface IP address can be customized per AP. |

The AP Management VLAN still requires access to the internet regardless of whether the Alternate Management Interface is enabled. The Alternate Management Interface VLAN must be enabled on the upstream switch port, which has to be configured in trunk mode. |

|

Number of Users per AP |

Perform a coverage survey AND a capacity survey. Determine the number of devices per user and their types. |

Influences the model and quantity of your Access Points | Use narrow channel widths (e.g. 20 MHz) for higher density deployments |

Total Number of SSIDs |

Collapse to fewer SSIDs (Do not exceed 5 per AP competing for the same airtime, best practice is to stay within 3 SSIDs) | Leverage Dynamic VLAN assignment (Requires an external Radius server such as Cisco ISE) | More SSIDs will lead to higher airtime overhead. Other considerations here |

SSID Visibility Requirements (e.g. Public, Hidden, Scheduled, etc) |

Hidden or Public (your choice), but consider zoning them to specific APs. Hiding an SSID can add extra work for IT administrators because they will have to manually configure the SSID on each machine, rather than telling users which network to connect to and the password.

|

Leverage AP tags to create SSID broadcasting zones

|

SSIDs should be hidden if they are not meant to be publicly broadcast (e.g. New brand, site, etc). Please note that hidden SSIDs will still be broadcasting 802.11 probes, and thus hiding an SSID is not considered to add any security benefit |

SSID Association Requirements (e.g. Open, PSK, iPSK, etc) |

Each SSID should be serving a use case (Corp users, Contractors, IoT, etc) rather than a network segment | Leverage Cisco ISE for a granular experience per user per SSID | All clients must support the selected option (e.g. |

SSID Encryption Requirements (e.g WPA1&2, WPA2, WPA3, etc) |

Choose the most secure but lowest common denominator for a given SSID | Always refer to firmware changelog | Please verify that it's supported on all clients connecting to this SSID |

Client Roaming Requirements per SSID (Layer 2, Layer 3, Layer 3 with concentrator)

|

Layer 2 roaming with Bridge mode offers the most seamless roaming experience when using 802.11r (aka FT). Layer 2 roaming can be achieved using Bridge mode SSIDs. It is recommended to limit roaming to a specific zone, e.g. a floor plan (to reduce your broadcast domain). |

Layer 2 roaming requires that all APs in a roaming zone have access to the client's VLAN. All APs within a roaming zone must be in the same dashboard network. |

Radio settings: aim for 15-25 dB SNR. Be cautious with your VLAN size (larger VLANs lead to a larger broadcast domain). Do not prune the client's VLAN on any of the switch ports where the participating APs are connected. Wi |

Layer 3 Roaming with 802.11r & OKC |

Use Layer 3 roaming with concentrator and enable Fast reconnect on client

|

Refer to Layer 3 roaming best practices

|

Requires IP communication with Meraki Registry (Source port range UDP 32768-61000 and destination port UDP 9350-9381). Requires IP communication with concentrator to establish tunnels (Source/Destination port range UDP 32768-61000). Please ensure proper IP communication on all required ports. Also, pay attention to the concentrator deployment mode (i.e. Routed mode vs One-armed mode) as this will influence the IP address of packets that are breaking out centrally (e.g. DHCP, DNS, Radius, etc). Read more here |

Layer 3 Roaming with PSK |

Use layer 3 roaming (aka distributed roaming) | Please refer to Layer 3 roaming best practices | APs will exchange roaming database using port UDP 9358 |

Management Frame Protection (802.11w) |

Choose the Enabled option if 802.11w is not supported on all clients connecting to this SSID | Applies to robust management frames | The Required option will prevent clients that do not support PMF from joining this SSID |

Mandatory DHCP |

Mandatory DHCP enforces that clients connecting to this SSID must use the IP address assigned by the DHCP server. Clients who use a static IP address won’t be able to associate. Choose Enable Mandatory DHCP if this is part of your security requirements. |

The default setting for this feature is disabled. Wireless clients configured with static IPs are not required to request a DHCP address.

|

All 802.11ac Wave 2 capable MR access points running MR 26.0 firmware or later support this feature.

|

WIPS (aka Air Marshal) |

Air Marshal mode allows network administrators to design an airtight network architecture that provides an industry-leading WIPS platform in order to completely protect the airspace from wireless attacks such as:

You specify whether or not clients are able to connect to rogue SSIDs. If you select block clients from connecting to rogue SSIDs by default, then devices will be automatically contained when attempting to connect to an SSID being broadcast by a non-Meraki AP seen on the wired LAN. This provides the greatest level of security for the wireless network. |

On MRs with a scanning radio, Air Marshal will not contain Rogue and Other SSIDs seen by the scanning radio if those SSIDs are on a DFS channel. However, if the client-serving radio of the MR is operating on a DFS channel that matches that of the Rogue or Other SSIDs, Air Marshal will contain those SSIDs on that DFS channel if configured to do so. |

6 GHz containment will not work because 6 GHz uses protected management frames, so it is not possible to contain the clients over the air |

Splash Page (None, Click-through, Sign-on, etc) |

Splash page frequency to control the user admission on the network |

Some clients (e.g. Printers) won't support Splash pages. Where applicable, Radius traffic will be sourced from the Meraki dashboard (not the AP). Check the full traffic flow here |

|

Splash Page Customization |

If you require a fully customized experience, it is recommended to deploy an external captive portal and leverage the EXCAP API. | Check this list for allowed HTML tags and attributes | |

Active Directory Integration |

Ensure IP communication path to your Active Directory server(s). It is recommended to access the Active Directory server via VPN as the traffic is not encrypted | Ports used: 3268, 389 | Please note that traffic is sent unencrypted |

Radius Server Integration |

Ensure IP communication path to your Radius server(s). It is recommended to access the Radius server via VPN, as the traffic is only hashed using the Radius secret. EAP timers should match those on your Radius server. Where applicable, Group policy can be sent back in a Radius attribute (e.g. Filter-Id) as a String. Group Policies can also assign VLANs to wireless users. Where applicable, a VLAN tag can be sent back in a Radius attribute (Tunnel-Private-Group-ID) with the Tunnel-Type (VLAN) and Tunnel-Medium-Type (IEEE-802) attributes in the Access-Accept message, IF dashboard is configured with "RADIUS response can override VLAN tag". Where applicable, CoA (Change of Authorization) can be used to adjust an active client session. CoA is a RADIUS code 43 frame. |

If your deployment uses CoA, ensure you enable Cisco ISE even if ISE is not used; otherwise audit-session-id is not included and the CoA exchange may not work. |

CoA is only supported on the following SSID modes: NAT mode, Bridge mode, Layer 3 Roaming, Layer 3 Roaming with a Concentrator, VPN. CoA is not supported on MR Repeaters.

For CoA with Splash page, the Radius server sends CoA messages to Meraki's FQDN on port UDP 799. With MR26+, the client won't be disconnected (i.e. No new DHCP request). Cloud Radius Proxy does not support CoA. |

|
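The dynamic VLAN assignment described in the Radius Server Integration row depends on three attributes arriving together in the Access-Accept, and on the dashboard override being enabled. The sketch below only illustrates that decision; it is not how the AP implements it. It assumes the attributes have already been parsed into a plain dictionary keyed by attribute name and that Tunnel-Private-Group-ID carries a numeric VLAN ID. Both function names are hypothetical.

```python
def vlan_from_access_accept(attrs):
    """Return the VLAN ID carried by an Access-Accept, or None.

    `attrs` is assumed to be a dict of already-decoded attribute values.
    None is returned when any of the three tunnel attributes is missing,
    inconsistent, or outside the valid 802.1Q range.
    """
    if attrs.get("Tunnel-Type") != "VLAN":
        return None
    if attrs.get("Tunnel-Medium-Type") != "IEEE-802":
        return None
    try:
        vlan = int(attrs.get("Tunnel-Private-Group-ID"))
    except (TypeError, ValueError):
        return None
    return vlan if 1 <= vlan <= 4094 else None


def effective_vlan(ssid_vlan, attrs, radius_override_enabled):
    """VLAN applied to the client: the RADIUS value wins only when the
    'RADIUS response can override VLAN tag' setting is enabled."""
    radius_vlan = vlan_from_access_accept(attrs) if radius_override_enabled else None
    return radius_vlan if radius_vlan is not None else ssid_vlan


accept = {
    "Tunnel-Type": "VLAN",
    "Tunnel-Medium-Type": "IEEE-802",
    "Tunnel-Private-Group-ID": "20",
}
```

For example, `effective_vlan(10, accept, True)` yields VLAN 20, while the same response with the override disabled keeps the SSID's own VLAN 10. A response missing Tunnel-Medium-Type also falls back to the SSID VLAN, which is a useful thing to test for when troubleshooting a Radius policy.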

Please ensure that:

For Fail-Closed (i.e. only clients that were previously successfully authenticated and verified with LDAP are authorized on the Wireless network), set the LDAP option to Verify Certificate CN with LDAP. For Fail-Open, leave the LDAP option set to Do not verify certificate with LDAP (please note that in this case, any wireless device that presents a valid certificate will be able to connect to the SSID regardless of the permissions set for that device/user). If you wish to check if the certificate presented to the MR is revoked, set OCSP to Verify Certificate with OCSP and set the OCSP Responder URL the MR can use to check the certificate against. |

MR uses the certificate signed by QuoVadis CA (before March 23rd 2021) and IdenTrust Commercial Root CA 1 (after March 23rd 2021), and the client uses the certificate signed by their own CA. Password supported types:

Certificate supported types:

Multiple LDAP servers can be used for different SSIDs. For more information about Root CAs and other related info, please refer to this documentation article. |

Alternative Management Interface can be used to communicate with the LDAP server only. It cannot be used to communicate with the OCSP server. With this auth method, an external RADIUS server is not involved in this process and is not needed. The RADIUS server on the MR will handle 802.1X authentication instead. MR27+ firmware is not supported on all MR models. Please refer to the Product Firmware Version Restrictions. EAP-MSCHAPv2 is not supported with Meraki Local Auth. Currently, the MR access point cannot check if a user’s account is locked or disabled in the Active Directory. In addition, it’s not possible to “pre-cache” specific clients only. By default, all clients will be cached. A maximum of one LDAP server is currently supported. Multiple LDAP servers can be used for different SSIDs. Secure LDAP (LDAPS) is not currently supported. If the Verify Certificate CN with LDAP option is set, only clients that were previously successfully authenticated and verified with the LDAP server as authorized for wireless access will be able to connect to the SSID (i.e. any clients that have not been successfully authenticated and, therefore, not cached will be denied access to the SSID if the LDAP server is down). |

|

|

Use NAT mode SSID if your client(s) only require access to the Internet. Use NAT mode SSID if your network does not have a DHCP server. Use NAT mode SSID if your network has a DHCP server but without enough address space. |

In NAT mode, Meraki APs run as DHCP servers to assign IP addresses to wireless clients out of a private 10.x.x.x IP address pool behind a NAT. By default, client(s) will use the same DNS handed out to the AP for DNS queries. This can be customized to use specific DNS server(s) if required. |

Do not use NAT mode SSID if your client(s) require access to local wired or wireless resources. All clients will be assigned an IP address in the range 10.0.0.0/8 (This cannot be customized). All client traffic will be NAT'd to the AP's management IP and sent untagged to the upstream switch port. VLAN tagging is not supported in NAT mode SSID. No communication between wired and wireless clients. Layer 2 discovery protocols (e.g. LLDP) cannot be used to discover hosts on the wired or wireless network. Do not use NAT mode SSID if your client(s) do not support NAT Traversal (e.g. VPN clients). Roaming is not supported in NAT mode SSID. |

|

|

It is recommended to use a default VLAN tag on a bridge mode SSID to avoid traffic being tagged in the AP's management VLAN. Use Bridge mode if wired and wireless clients in the network need to reach each other (e.g. Mobile phone needs to access a wired printer). Use Bridge mode if multicast/broadcast traffic is required to flow between wireless and wired. Use Bridge mode if your client(s) do not support NAT Traversal. Use Bridge mode if you need to tag your SSID in a specific VLAN (static or dynamic). Use Bridge mode if you're using IPv6. Check IPv6 bridging for more information. Bridge mode is recommended for seamless roaming across Meraki MR Access Points (Within the same layer 2 domain, i.e. same VLAN). Leverage Bridge mode VLAN tagging via AP tag to group APs together into a roaming zone, e.g. a floor plan (For example, all APs in the lobby area are tagged with "Lobby_AP" and APs in the Sales area are tagged with "Sales_AP"; the Bridge SSID can then tag traffic in VLAN 10 for APs tagged with Lobby_AP and in VLAN 20 for APs tagged with Sales_AP). This will provide the best experience for seamless roaming with 802.11r and OKC, as users roaming within a roaming zone will not require a new DHCP request. Avoid using large VLANs (e.g. /16) as this will create a larger broadcast domain. Instead, assign a VLAN per zone (e.g. floor plan). |

If no VLAN tag is specified for the SSID, traffic will be sent untagged to the upstream switch port. That port will most likely be configured with the management VLAN as its native VLAN, in which case the SSID traffic will be placed in the management VLAN. VLANs assigned via Group Policies supersede those configured on the Access control page. Group policies can either be assigned statically to clients or dynamically via a Radius attribute. |

Adult Content Filtering is not supported on Bridge mode. Remember to allow the whole range of tagged VLANs (static AND dynamic) on the upstream switch port(s) where your AP is connected (i.e. upstream switch ports must be configured in trunk mode). |

|
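The per-zone tagging example in the Bridge mode row (Lobby_AP in VLAN 10, Sales_AP in VLAN 20) amounts to a lookup from AP tag to VLAN with a default for untagged APs. The following sketch models that lookup for planning purposes only. The function name, the default VLAN value of 30 and the first-match rule for APs carrying several tags are assumptions made for illustration; they are not documented dashboard behavior.

```python
def vlan_for_ap(ap_tags, tag_to_vlan, default_vlan):
    """Return the VLAN a bridge mode SSID would use on an AP.

    `tag_to_vlan` is an ordered mapping of AP tag -> VLAN ID. The first
    entry whose tag is present on the AP wins (an assumption of this
    sketch); APs matching no entry fall back to `default_vlan`.
    """
    for tag, vlan in tag_to_vlan.items():
        if tag in ap_tags:
            return vlan
    return default_vlan


# Mapping taken from the example in the table above.
zone_vlans = {"Lobby_AP": 10, "Sales_AP": 20}
DEFAULT_SSID_VLAN = 30  # hypothetical default VLAN tag for the SSID
```

Running the planned AP inventory through `vlan_for_ap` gives the complete set of VLANs that must be allowed on each AP's upstream trunk port, which is the caveat called out in the same row.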

Layer 3 Roaming Mode SSID (aka Distributed Layer 3 Roaming) |

Ensure that all APs in a roaming zone can communicate with each other using port UDP 9358 and using ICMP. Distributed layer 3 roaming is best for large networks with a large number of mobile clients. It is recommended to use the "Test" button on dashboard after any change made on your switches. Choose distributed layer 3 roaming over Layer 3 roaming w/ concentrator if:

|

A client's anchor AP will time out after the client has left the network for 30 seconds. APs will attempt to do a broadcast domain mapping by sending broadcast frames of type 0x0a89 every 150 seconds. Repeaters don’t have their own IP address, so they cannot be anchor APs. When a client connects to a repeater, the repeater becomes the client’s hosting AP, and the repeater assigns its gateway as the client’s anchor AP.

|

Fast roaming protocols such as OKC and 802.11r are not currently supported with distributed layer 3 roaming. The best roaming performance is achieved using Layer 2 roaming with 802.11r. All APs in a roaming zone must be in the same dashboard network (but can be in any network segment as long as they can communicate using IP and port UDP 9358). A client's anchor AP will time out after the client has left the network for 30 seconds. An AP could theoretically broadcast BCD announcement packets to all 4095 potentially attached VLANs; however, it will limit itself to the VLANs outlined below:

With Wireless Meshing; Repeaters don’t have their own IP address, so they cannot be anchor APs. When a client connects to a repeater, the repeater becomes the client’s hosting AP, and the repeater assigns its gateway as the client’s anchor AP. |

|

Ensure that all APs in a roaming zone can communicate with Meraki Key registry on destination port UDP 9350-9381 (source port UDP 32768-61000) - Check traffic flow here. Ensure that all APs in a roaming zone can communicate with their concentrator on destination port UDP 32768-61000 (source port UDP 32768-61000) - Check traffic flow here. It is recommended to have concentrator resiliency with one of the following options:

It is recommended to use the same concentrator mode on ALL used concentrators (do not mix Routed and One-armed modes even for geo-resilient concentrators). When using Warm-spare concentrators, it is recommended to use an upstream VIP (virtual IP) to minimize disruption during tunnel failover. Refer to the latest MX sizing guide for your concentrator(s). Choose Layer 3 roaming w/ concentrator over distributed layer 3 roaming if:

|

Each configured SSID will form a Layer 2 VPN tunnel to its concentrator(s) (maximum two). With warm-spare concentrators, tunnels only form to the active unit (either to the physical IP address assigned to its uplink OR to the virtual IP). Both Routed and One-armed Concentrator modes are supported (More info here). Both MX and vMX concentrators can be used. With Routed mode concentrator:

With One-armed concentrator:

|

Sizing:

Resiliency:

Warm-spare design notes: With warm-spare concentrators, the two units need to exchange VRRP packets as follows:

Routed mode concentrator design notes:

One-armed mode concentrator design notes:

|

|

|
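The layer 3 roaming rows above name three UDP flows that the wired network must permit: AP to AP on port 9358, AP to the Meraki registry on destination ports 9350-9381, and AP to concentrator on destination ports 32768-61000. The sketch below checks a simplified allow-list against those flows. The flow names, the rule format (protocol, lowest port, highest port) and the function are all hypothetical, and the check deliberately ignores source ports, addresses and direction, so it is only a first-pass sanity check.

```python
# Destination-port requirements quoted from the roaming rows above.
REQUIRED_FLOWS = {
    "ap_to_ap_roaming": ("udp", 9358, 9358),
    "ap_to_registry": ("udp", 9350, 9381),
    "ap_to_concentrator": ("udp", 32768, 61000),
}


def uncovered_flows(allowed, required=REQUIRED_FLOWS):
    """Return the names of required flows that no single rule fully covers.

    `allowed` is an iterable of (protocol, low_port, high_port) tuples.
    A flow counts as covered only if one rule spans its whole port range;
    adjacent rules are not merged in this sketch.
    """
    missing = []
    for name, (proto, low, high) in required.items():
        covered = any(
            rule_proto == proto and rule_low <= low and high <= rule_high
            for rule_proto, rule_low, rule_high in allowed
        )
        if not covered:
            missing.append(name)
    return missing


# Example allow-list that forgets the concentrator tunnel range.
example_rules = [("udp", 9350, 9381)]
```

With `example_rules`, the registry range also happens to cover port 9358, so only the concentrator flow is reported as missing.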

Recommended for small branch offices, teleworker or executive home offices, and temporary site offices (e.g. construction sites) with no more than 5 users per AP. Where possible (e.g. in case of an onsite NGF such as a Meraki MX Security and SD-WAN appliance), configure split tunneling as opposed to full tunneling for better network performance. Ensure that all APs in a roaming zone can communicate with Meraki Key registry on destination port UDP 9350-9381 (source port UDP 32768-61000) - Check traffic flow here. Ensure that all APs in a roaming zone can communicate with their concentrator on destination port UDP 32768-61000 (source port UDP 32768-61000) - Check traffic flow here. It is recommended to have concentrator resiliency with one of the following options:

It is recommended to use the same concentrator mode on ALL used concentrators (do not mix Routed and One-armed modes even for geo-resilient concentrators). When using Warm-spare concentrators, it is recommended to use an upstream VIP (virtual IP) to minimize disruption during tunnel failover. Refer to the latest MX sizing guide for your concentrator(s). |

Each configured SSID will form a Layer 2 VPN tunnel to its concentrator(s) (maximum two). With warm-spare concentrators, tunnels only form to the active unit (either to the physical IP address assigned to its uplink OR to the virtual IP). Both Routed and One-armed Concentrator modes are supported (More info here). Both MX and vMX concentrators can be used. With Routed mode concentrator:

With One-armed concentrator:

|

Sizing:

Resiliency:

Warm-spare design notes: With warm-spare concentrators, the two units need to exchange VRRP packets as follows:

Routed mode concentrator design notes:

One-armed mode concentrator design notes:

|

|

SSID Traffic Shaping |

It is recommended to apply traffic shaping settings on the access edge (i.e. on your Wireless APs). 5 Mbps is a good recommendation for the per-client bandwidth limit in high-density environments (You can override this limit for specific devices and applications). Consider using SpeedBurst where applicable (enables bursts of four times the allotted bandwidth limit for five seconds). Dashboard offers default shaping rules which could be sufficient. Otherwise, you can create your own rules (Rules are processed in top-bottom order). Traffic can be shaped based on:

WMM and QoS settings (e.g. DSCP, CoS) can be applied on:

FastLane can enhance the network performance by assigning wireless profiles to your clients. Refer to this table for downstream QoS mappings and ensure this is consistent with your Access switch(es) settings. Also ensure that switchports used as uplinks for MRs are set to trust DSCP. |

Traffic shaping can be applied using the following options:

Since traffic shaping can be set in different ways, dashboard will use the following order of policy (lowest to highest):

|

Traffic shaping is per flow (e.g. a 5 Mbps limit for Webex is applied per flow, so each client will be limited to 5 Mbps of Webex traffic). Traffic shaping is not for limiting the wireless data rate of the client but the actual bandwidth as the traffic is bridged to the wired infrastructure. MR APs will automatically perform a multicast-to-unicast packet conversion using the IGMP protocol. The unicast frames are then sent at the client negotiated data rates rather than the minimum mandatory data rates, ensuring high-quality video transmission to large numbers of clients. This can be especially valuable in instances such as classrooms, where multiple students may be watching a high-definition video as part of a classroom learning experience. MR APs will automatically limit duplicate broadcasts, protecting the network from broadcast storms. The MR access point will limit the number of broadcasts to prevent broadcasts from taking up air-time. This also improves the battery life on mobile devices by reducing the amount of traffic they must process. |
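The SpeedBurst behavior mentioned in the SSID Traffic Shaping row (bursts of four times the allotted limit for five seconds) can be pictured as a simple step function. The sketch below is only a mental model. It assumes the burst window starts at the beginning of a transfer, which this document does not specify, and the function name is hypothetical.

```python
SPEEDBURST_MULTIPLIER = 4  # "four times the allotted bandwidth limit"
SPEEDBURST_SECONDS = 5     # "for five seconds"


def allowed_rate_mbps(limit_mbps, seconds_into_transfer, speedburst=True):
    """Rate ceiling at a given moment of a transfer, in Mbps.

    Assumes the burst window starts when the transfer starts; outside the
    window, or with SpeedBurst disabled, the configured limit applies.
    """
    in_burst = 0 <= seconds_into_transfer < SPEEDBURST_SECONDS
    if speedburst and in_burst:
        return limit_mbps * SPEEDBURST_MULTIPLIER
    return limit_mbps
```

With the 5 Mbps per-client limit suggested for high-density environments, this model allows up to 20 Mbps during the first five seconds and 5 Mbps afterwards. This is why short page loads feel faster while sustained downloads stay capped.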

SSID Firewall Rules

|

L3/L7 firewall rules can be used to filter outgoing traffic. Use Deny access to local LAN to prevent clients from accessing any LAN (RFC 1918) resources (DNS & DHCP are exempt). Use layer 2 isolation (bridge mode only) to prevent Wireless Clients from communicating with each other. IPv6 DHCP guard and IPv6 RA guard can be applied with MR28.1+. |

Layer 3 firewall rules on the MR are stateless and can be based on destination address and port. Layer 7 firewall rules can either be category based or application based. NBAR is supported on WiFi6 Access Points with MR27.1+. Check firmware compatibility with your APs here. Firewall Rules can be applied using the following options:

DHCP Guard has the following options:

Router Advertisement (RA) Guard has the following options:

|

MRs can have a maximum of 1800 firewall rules. NBAR support requires the following:

Deny access to local LAN and layer 2 isolation (bridge mode only) can only be applied on the global SSID settings (i.e. not configurable via a Group policy). |

Umbrella Integration |

There are two options for Umbrella integration:

|

When a Meraki SSID is initially linked, it will inherit the default Umbrella Policy, which will be the last policy in Umbrella's ordered list. Once a policy is assigned to a network device (SSID/group policy) in the Umbrella dashboard, any policies below the one selected for the network device will not be checked against. The policy list in Umbrella is read in a top-down order and once a match is found for the device ID, no other policies will be evaluated. More information can be found in Cisco Umbrella's Policy Precedence documentation. |

Cisco Umbrella has two potential endpoints that Meraki will send DNS traffic to: 208.67.222.222/32 and 208.67.220.220/32. Make sure that bi-directional UDP 443 to both of these addresses is allowed on any upstream devices. DNS exclusion is only available for SSIDs configured in Bridge Mode. For HTTPS blocking, blocked requests for HTTPS content will not load the Umbrella blocked page correctly. Instead, users will be presented with a generic "Webpage is not available" error.

|

Radio Planning |

Site Surveys:

For ceiling mounted APs:

For wall mounted APs:

Frequency Bands:

Radio Management:

DFS:

Antennas:

Please ensure that at any given time, channel utilization is kept below 50%, as higher utilization will impact your network performance. Two factors are important: 1) the utilization value and 2) how long it lasts (i.e. whether it is sustained or just a burst). |

Radio samples are sent from Meraki APs to the cloud every second. With AutoRF, TX power can be reduced by 1-3 dB per iteration and is increased in 1 dB iterations. For 2.4 GHz, the Auto Power reduction algorithm only allows TX power to go down to 5 dBm. For 5 GHz, the Auto Power reduction algorithm only allows TX power to go down to 8 dBm (If lower TX power is needed, APs can be statically set to lower power). AutoRF will only switch channels if there is high utilization and a better channel is available (Process runs every 15 minutes). Dashboard refers to the 2.4 GHz radio as radio: 0 and the 5 GHz radio as radio: 1. Dashboard refers to the first configured SSID as vap: 0 and then each subsequent SSID as vap: x (you can configure up to 15 SSIDs on dashboard). CSAs will be used to move clients from one 20 MHz or 40 MHz channel to another (Does not apply to 80 MHz channels). When a DFS event occurs, all the APs in a network will switch to the next best channel (disconnecting all clients on DFS channels). If the non-802.11 utilization on one of the client-serving radios is 65% or greater for one minute, the dashboard will instruct the AP to change to a different channel. Client Balancing information is exchanged between APs on the LAN via Broadcast frames using UDP port 61111. Refer to this document to better understand signal propagation. Understanding EIRP: EIRP (without beamforming) = transmit strength (dBm) + antenna gain (dBi) - cable loss (dB). For example, if an AP's output power is 21 dBm, the cable loss is 1 dB, and the max EIRP for the band is 1 W or 30 dBm, an antenna with a gain of up to 10 dBi can be used within the legal maximum EIRP. EIRP (with beamforming) = transmit strength (dBm) + antenna gain (dBi) + beamforming gain (3 dB) - cable loss (dB).

|

- MR APs can be used in site survey mode.

- A maximum of 50 RF profiles can be defined.

- When an AP is initially deployed, it uses the default channels of 1 for 2.4 GHz and 36 for 5 GHz, and the transmit power is set to Auto. These settings can be manually configured on the dashboard per AP before deploying the wireless setup.

- Some legacy clients do not inspect CSAs (please contact Meraki support to disable the AutoChannel feature).

- Gateways serving active meshed Repeaters will not change channels.

- Ensure that APs participating in Client Balancing are on the same broadcast domain (e.g. a floor or a building may all share a common management VLAN).

- MR APs that do not support 80 MHz-wide channels will default to 40 MHz for their channel width.

- RX-SoP is available only on Meraki 802.11ac Wave 2 APs and higher.

- After RF profiles have been assigned, TX power and channel assignment changes might take up to 60 minutes to take effect, as calculations are done through the Meraki dashboard.

- Refer to the hardware install guides for guidance on antenna selection. Two identical antennas are necessary if using a 2-port antenna on the MR84.

- Please note that radios on newer generations of MR access points (e.g. WiFi-6) have a higher sensitivity compared to older generations (e.g. WiFi-5 Wave 2), which might result in a higher number of DFS events detected by newer APs.

- The Meraki Cloud assigns the appropriate regulatory domain information to each Meraki Access Point. Which bands and channels are available for a particular Meraki Access Point depends on the model, indoor/outdoor operation, and the regulatory domain. Since the Meraki Cloud enforces the wireless regulations, the channel list on the Radio Settings page will show the channels the access point is certified to use. For more specific certification and band information, please refer to the install guides and the regulatory information pamphlet included within the AP's original packaging.

|

|

- We recommend that you perform a radio site survey before installing the equipment (this involves temporarily setting up mesh links and taking measurements to determine whether your antenna calculations are accurate).

- It is generally recommended to allow auto-channel selection in networks with repeaters. However, each use case must be assessed against its specific requirements (antenna selection, frequency band, etc).

- Minimize the number of hops between Repeaters and Gateways (ideally a single hop).

- Maximize the number of Gateways available in the vicinity of your Repeater (plan for no more than two repeater access points attached to each gateway access point; it is also recommended that each mesh access point has at least three strong neighbors).

- Although manual channel selection is not recommended for meshing, if you really need it, change the channel on all Repeaters first and then on the Gateway.

- Use AP tags to disable undesired SSIDs on a Repeater (by default the Repeater will broadcast all SSIDs of its Gateway).

- It is recommended to stage access points before deploying to ensure that they update to the latest firmware and download the proper configuration from the Meraki Dashboard. Once deployed, firmware and configuration updates may occur over the mesh network.

Indoor Meshing Best Practices:

Outdoor Meshing Best Practices:

|

Meraki APs will attempt to form a Meshing link if:

Meraki Repeater APs will search for a new Gateway if:

Data traffic over a wireless mesh link is encrypted using the Advanced Encryption Standard (AES) algorithm. Meraki APs in Repeater mode automatically choose the gateway with the best mesh metric, which is calculated from the received mesh probes. Meraki APs in Gateway mode broadcast mesh probes (broadcast frames with different bit rates and varying sizes, sent every 15 seconds on both 2.4 and 5 GHz). Once an AP enters mesh mode, it scans all channels to collect information from all neighbors. If a valid neighbor (an in-network AP or a Meraki AP) is found, the AP moves to that neighbor's channel. The configured channel takes precedence if a valid neighbor is found on it. If no valid neighbor is found on any channel, the AP stays on the configured channel.

While it is not possible to select which frequency band should be used for meshing, it is possible to manually adjust channel selections to direct the AP toward a desired behavior. To do this, refer to the article on manually changing channels in a mesh network. If two APs should mesh on 5 GHz rather than 2.4 GHz, set both APs to the same 5 GHz channel but to different 2.4 GHz channels. Keep in mind, though, that a frequency band cannot be allocated specifically for meshing, and both bands will still be available for servicing clients unless the SSID is configured to use the 5 GHz band only. |

- Only Cisco Meraki APs can function as repeaters and gateways. Wireless MX security appliances, Z-Series teleworker gateways, and third-party APs cannot participate in a wireless mesh.

- Wireless meshing utilizes both bands (a frequency band cannot be allocated specifically for meshing).

- The only way to force meshing on 5 GHz is to disable the 2.4 GHz radio.

- A Repeater meshing to a Meraki AP Gateway in the same dashboard network will use its gateway to send both control plane and data plane traffic.

- A Repeater meshing to a Meraki AP Gateway that does not belong to the same dashboard network will use its gateway to send only control plane traffic.

- It is not possible to configure a static IP address for a repeater AP; doing so will automatically designate the device as a gateway instead of a repeater.

- Wireless meshing reduces throughput by approximately 50% per hop (i.e. per AP on the path to a valid gateway).

- It is not recommended to disable meshing, because it provides a fallback for unexpected failures on the wired network.

- Environmental factors, such as moisture and wind, affect performance and link quality.

- Make sure the AP is grounded properly to prevent static buildup and to protect against other electrical issues.

- The Meraki PoE Injector must be deployed indoors as it is not waterproof or ruggedized.

- Conform with the device's listed operating temperature (refer to datasheets).

|
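The "approximately 50% per hop" figure compounds quickly, which is why a single hop is preferred. A minimal sketch of the arithmetic, assuming the loss is the same at every hop (the function name and the 400 Mbps starting figure are hypothetical):

```python
def estimated_mesh_throughput(gateway_throughput_mbps, hops, loss_per_hop=0.5):
    """Rough throughput available after `hops` mesh hops.

    Assumes each hop removes the same fraction of throughput
    (approximately 50%, per the guidance above).
    """
    if hops < 0:
        raise ValueError("hops must be zero or positive")
    return gateway_throughput_mbps * (1 - loss_per_hop) ** hops
```

For example, a hypothetical 400 Mbps at the gateway drops to roughly 200 Mbps after one hop and 100 Mbps after two.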

|

|

- Bluetooth scanning enhances location analytics by giving you a holistic view of both 802.11 and Bluetooth clients

- Bluetooth beaconing is useful for client engagement use cases

- Best practices related to Meraki MT sensors:

|

For Meraki MT sensors, only WiFi 6 and WiFi 5 Wave 2 MR access points can be used as gateways

|

||

|

- It is recommended to configure a per-client limit to ensure that real-time applications do not fight for bandwidth on the SSID

- It is recommended to configure traffic shaping rules (either default or custom) for real-time applications

- It is recommended to configure your upstream switches (e.g. Meraki MS product family) to trust incoming DSCP values from Meraki access points

- If your SSID is configured in Layer 3 roaming w/ concentrator mode, it is recommended that the traffic is terminated on a Voice VLAN on the far end and that you ensure that QoS is configured appropriately at the far end

- Follow these steps as VoWiFi best practices:

|

For other product-specific recommendations, please refer to this guide This FAQ guide can be useful to troubleshoot VoWiFi issues. |

- Do not use NAT mode for VoWiFi

- Do not use Layer 3 Roaming mode for VoWiFi if you require 802.11r and OKC for your VoIP devices

- Do not use Layer 3 Roaming w/ concentrator if you do not wish for VoIP traffic to be tunneled to a central concentrator

- Setting the minimum bit rate to 12 Mbps or higher means that 802.11b legacy clients are not supported

|

|

|

- With MR24.5+, MR access points use either LLDP or CDP to negotiate power level. Hence, please make sure the upstream switch is configured to negotiate power level with LLDP or CDP

- Avoid running in low power mode, as this will shut down both the scanning radio (which disables WIPS and AutoRF) and the Bluetooth radio (which disables MT connectivity and BLE scanning and advertisements)

- Indoor Access Points can be powered by either:

Outdoor Access Points can be powered by either:

For energy saving purposes, consider using SSID scheduling and MS Port Schedules (where applicable) |

Full details on low power mode can be found here

|

While in low power mode, the MR will disable its Air Marshal radio as well as one out of three transmit streams on the 2.4 GHz band (leaving two transmit streams still operating) In comparison, models without the dedicated scanning radio, such as the MR12/16/20/24/70, scan the whole spectrum every 2 hours when there are no clients associated. When low power mode is enabled the scanning radio function becomes disabled. Thus, not only are there no channel_scan events but AutoRF channel assignment can be negatively impacted. |

|

Adaptive Policy (aka AdP) |

- Adaptive Policy requires the MR-ADV license, which is only supported in the PDL license model

- When deploying a combined network, please ensure that all devices (e.g. MR, MS390, MX) support AdP (HW and SW)

- To enable RADIUS-based tagging (e.g. Cisco ISE), please ensure that the RADIUS attribute value pair (av-pair) uses the Cisco SGT AV-Pair with the tag presented as a HEX value (e.g. cisco-av-pair:cts:security-group-tag=0fa0-00). This example sends back an SGT of 4000

|
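The hexadecimal value in the example above is simply the decimal SGT written as four hex digits (4000 decimal is 0fa0 hex). The helper names below are hypothetical, and the sketch makes two assumptions: that the SGT fits in 16 bits, and that the trailing `-00` suffix should be copied unchanged from the documented example.

```python
def sgt_to_av_pair(sgt):
    """Build the av-pair string for a decimal SGT, following the example format."""
    if not 0 <= sgt <= 0xFFFF:  # assumption: SGT is a 16-bit value
        raise ValueError("SGT must be between 0 and 65535")
    # Four lowercase hex digits, then the "-00" suffix seen in the example.
    return f"cisco-av-pair:cts:security-group-tag={sgt:04x}-00"


def av_pair_to_sgt(av_pair):
    """Recover the decimal SGT from an av-pair string in the same format."""
    value = av_pair.rsplit("=", 1)[1]
    return int(value.split("-")[0], 16)
```

Calling `sgt_to_av_pair(4000)` reproduces the string shown above, and `av_pair_to_sgt` converts it back to 4000, which is a quick way to sanity-check values before entering them in a RADIUS policy.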

AdP is supported with:

- In a hybrid architecture (e.g. with Cisco Catalyst as Core), please refer to this configuration guide

- To enable CMD tagging on an upstream TrustSec-capable switch, please refer to IOS-XE Trunk Port Configuration

- To sync Adaptive Policy between Dashboard and Cisco ISE, refer to this tool.

|

- Tagging is only supported in NAT and Bridge mode

- If the upstream switch is a Meraki MS and the switch port where the MR is connected is not configured as Peer SGT capable, the MR will disable tagging for that AP. The same thing will happen if the upstream switch (regardless of its make or model) does not send Cisco MetaData (CMD) encapsulated traffic for a set number of frames. This is known as fail-safe (the AP will completely disable tagging until tagging is enabled on the connected switch and encapsulation is observed on the incoming frames)

- The RADIUS assignment of group tags is done per session and requires the av-pair in every Access-Accept for the client

- Please note that you can apply a default tag to the SSID and override it with a RADIUS response

|

|

SecureConnect automates the process of securely provisioning Meraki MR Access Points when directly connected to switch-ports on Meraki MS Switches, without the requirement of a per-port configuration on the switch

A SecureConnect-capable MR access point connected to an MS switch enabled for SecureConnect should not be configured with a LAN IP VLAN number |

Supported APs will start off only being able to reach dashboard on the switch management VLAN. The APs will have 3 attempts of 5 seconds each to authenticate. If this authentication fails, the switch's port will fall into a restricted state. This might show itself in a couple of ways:

|

- The failed authentication can happen if the AP and switch are in different organizations, or if the AP is not claimed in inventory.

- Supported on (with 27.6) MR20, MR30H, MR33, MR42, MR42E, MR52, MR53, MR53E, MR70, MR74, MR84, MR45, MR55, MR36, MR46, MR46E, MR56, MR76, MR86

- If you have an MR44 or MR46, please contact Meraki support to check for supportability

- For further information on supported MS switch models, please check here

|

Wired LAN

Introduction

A traditional Campus LAN Solution will reflect a hierarchical architecture with the following layers:

- Access Layer

- Distribution Layer

- Core Layer

When designing your Wired Campus LAN, it is recommended to start planning in a bottom-up approach (i.e. start at the Access Layer and go upwards). This will simplify the design process and ensure that you have taken into account the design requirements from an end to end perspective. As always, the design process should be done in iterations revising each stage and refining the design elements until the desired outcome can be achieved.

Here's an explanation of each layer in detail and the design aspects that should be considered for each:

Access Layer

The access layer is the first tier or edge of the campus. It is the place where end devices (PCs, printers, cameras, and the like) attach to the wired portion of the campus network. It is also the place where devices that extend the network out one more level are attached: IP phones and wireless access points (APs) are the two prime examples of devices that extend connectivity out one more layer from the actual campus access switch. The wide variety of device types that can connect, and the various services and dynamic configuration mechanisms that are necessary, make the access layer one of the most feature-rich parts of the campus network.

Distribution Layer

The distribution layer in the campus design has a unique role in that it acts as a services and control boundary between the access and the core. It's important for the distribution layer to provide the aggregation, policy control and isolation demarcation point between the campus distribution building block and the rest of the network. It defines a summarization boundary for network control plane protocols (OSPF, Spanning Tree) and serves as the policy boundary between the devices and data flows within the access-distribution block and the rest of the network. In providing all these functions the distribution layer participates in both the access-distribution block and the core. As a result, the configuration choices for features in the distribution layer are often determined by the requirements of the access layer or the core layer, or by the need to act as an interface to both.

Core Layer

The campus core is in some ways the simplest yet most critical part of the campus. It provides a very limited set of services, yet must operate as a non-stop 7x24x365 service. The key design objectives for the campus core must also permit the occasional, but necessary, hardware and software upgrade/change to be made without disrupting any network applications. The core of the network should not implement any complex policy services, nor should it have any directly attached user/server connections. The core should also have a minimal control plane configuration combined with highly available devices configured with the correct amount of physical redundancy to provide for this non-stop service capability.

The following table compares the main functions and design aspects of the three campus layers:

| Access Layer | Distribution Layer | Core Layer | |

|

Main Function

|

|

|

|

|

Design Aspects

|

* Please remember to factor for both the total power budget required per switch AND the power standard(s) required |

|

|

Collapsed Core Layer

One question that must be answered when developing a campus design is this: Is a distinct core layer required? In those environments where the campus is contained within a single building—or multiple adjacent buildings with the appropriate amount of fiber—it is possible to collapse the core into the two distribution switches.

It is important to consider that in any campus design, even one that can physically be built with a collapsed distribution core, the primary purpose of the core is to provide fault isolation and backbone connectivity. Isolating the distribution and core into two separate modules creates a clean delineation for change control between activities affecting end stations (laptops, phones, and printers) and those that affect the data center, WAN or other parts of the network. A core layer also provides flexibility for adapting the campus design to meet physical cabling and geographical challenges.

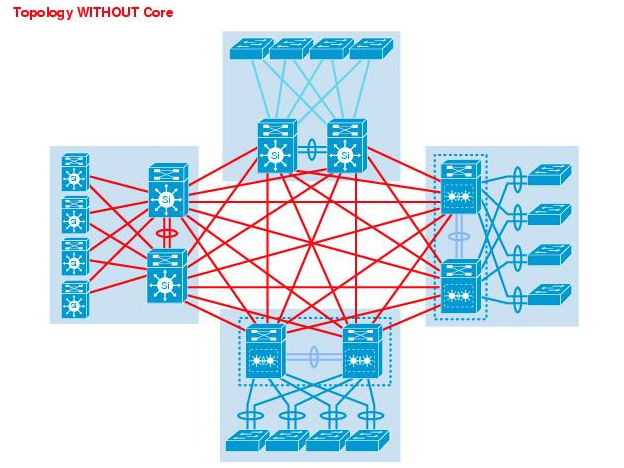

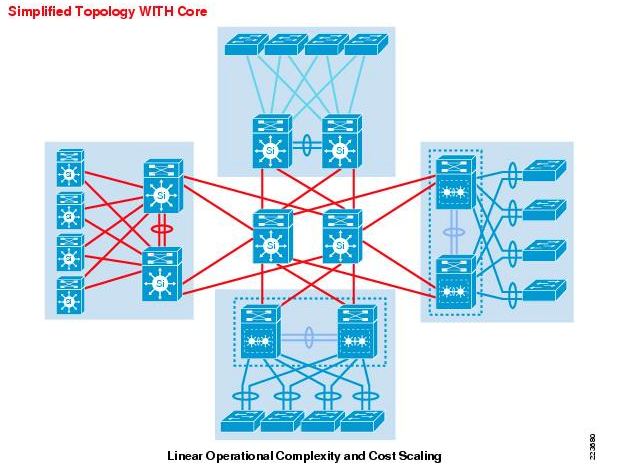

To illustrate the differences between having a Core layer and a Collapsed Core layer and how that relates to scalability, please see the following two diagrams:

Topology without Core Layer

Topology with Core Layer

Having a dedicated core layer allows the campus to accommodate this growth without compromising the design of the distribution blocks, the data center, and the rest of the network. This is particularly important as the size of the campus grows either in number of distribution blocks, geographical area or complexity. In a larger, more complex campus, the core provides the capacity and scaling capability for the campus as a whole.

The question of when a separate physical core is necessary depends on multiple factors. The ability of a distinct core to allow the campus to solve physical design challenges is important. However, it should be remembered that a key purpose of having a distinct campus core is to provide scalability and to minimize the risk from (and simplify) moves, adds, and changes in the campus. In general, a network that requires routine configuration changes to the core devices does not yet have the appropriate degree of design modularisation. As the network increases in size or complexity and changes begin to affect the core devices, it often points out design reasons for physically separating the core and distribution functions into different physical devices.

As a general rule of thumb, if your Distribution Layer is more than one stack (or two distribution units), it is recommended to introduce a dedicated Core Layer to interconnect the Distribution Layer and all the other network components.

It's recommended to follow a collapsed core approach if your distribution layer is:

- A single switch (No HSRP/VRRP/GLBP needed in this case)

- A stack of MS switches (No VRRP/warm-spare needed in this case)

- A pair of MS switches (Enable routing on access layer if possible, more guidance given in the below sections, otherwise enable VRRP/Warm-spare)

- Two stacks of Cisco Catalyst switches (e.g. C9500)

Otherwise, it's recommended to follow a traditional three-tier approach to achieve a more scalable architecture

Planning, Design Guidelines and Best Practices

Planning for your Deployment

The following points summarize the design aspects of a typical Wired LAN that need to be taken into consideration. Please refer to Meraki documentation for more information about each of the following items.

- Hierarchical design traditional approach with 3 layers (access, aggregation, core) vs more common approach with 2 layers (access, collapsed core)

- Port density required on your access layer

- What port type/speeds are required on your MDF layer (GigE, mgigE, 10GigE, etc)

- Patching requirements between your IDF and MDF (Electrical, Multi-mode fibre, Single-mode fibre, etc)

- Number of stack members where applicable (this will influence your ether channels and thus number of ports on aggregation layer)

- Stackpower requirements where applicable (e.g MS390, C9300-M, etc)

- Port density required on your aggregation/collapsed-core layer

- Switching capacity required on your aggregation/collapsed-core layer

- Consider using physical stacking on the access layer (typically useful if they are part of an IDF closet and cross-chassis port channeling is required)

- Layer 3 routing on Access layer (typically useful to reduce your broadcast domain and helps with fault isolation/downtime within your network)

- Calculate your PoE budget requirements (Which will influence your switch models, their power supplies and power supply mode)

- Check your Multicast requirements (IGMP snooping, Storm Control, Multicast routing, etc)

- Whether you require DHCP services on your access layer (distributed DHCP as opposed to centralized DHCP)

- End-to-end segmentation (Classification, Enforcement, etc.) using Security Group Tags requires MS390 Advanced License

- What uplink port speeds are required on the access layer (GigE, mgigE, 10GigE, etc)

- Whether any ports on your switch(es) need to be disabled

- On your access switches designate your upstream connecting ports (For non modular switches, e.g Ports 1-4)

- On your access switches, designate your Wireless LAN connecting ports (e.g Ports 5-10)

- On your access switches, designate your client-facing ports (e.g Ports 12-24)

- On your access switches, designate ports connecting to downstream switches (where applicable)

- On your access switches, designate ports that should provide PoE (e.g. Connecting downstream Access Points, etc)

- On your aggregation switches, designate ports connecting to upstream network (For non modular switches, e.g Ports 1-4)

- On your aggregation switches, designate ports connecting to downstream switches (e.g. Ports 10-16)

- On your switches, designate ports that should be isolated (e.g Restrict access between clients on the same VLAN)

- On your switches, designate ports that should be mirroring traffic (e.g Call recording software, WFM software, etc)

- On your switches, designate ports that should be in Trusted mode where applicable (For DAI inspection purposes)

- Choose your QoS mark and trust boundaries (i.e where to mark traffic, marking structure and values, trust or re-mark incoming traffic, etc)

- All client-facing ports should be configured as access ports

- Ports connecting downstream Access Points can either be configured as access (e.g NAT mode SSID, untagged bridge mode SSID, etc) or trunk (e.g tagged bridge mode SSID)

- Using Port Tags can be useful for administration and management purposes

- Using Port names can be useful for management purposes

- Do you require an access policy on your access ports (Meraki Authentication, external Radius, CoA, host mode, etc)

- What native VLAN is required on your access port(s) and will that be different per switch/stack?

- What management VLAN do you wish to use on your network? Will that be the same for all switches in the network or per switch/stack?

- Do you require a Voice VLAN on your access port(s)

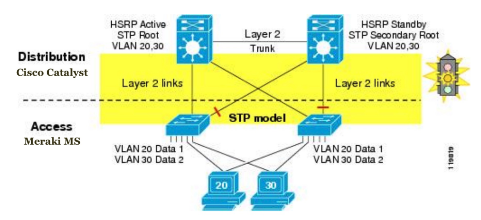

- Size your STP domain based on your topology, no more than 9 hops in total.

- Designate your root switch based on your topology and designate STP priority values to your switches/stacks accordingly

- Do not disable STP unless absolutely required (e.g speed up DHCP process, entailed by network topology, etc)

- Use STP guards on switch ports to enhance network performance and stability

- Use STP BPDU guard on client-facing access ports

- Disable STP BPDU guard on ports connecting downstream switches

- Use STP Root guard on downstream ports connecting switches that are not supposed to become root

- Use STP Loop guard in a redundant topology on your blocking ports for further loop prevention (e.g Receiving data frames but not BPDUs)

- Always enable auto-negotiation unless the other end does not support that

- Enable UDLD when supported on the other end (Also please refer to Meraki firmware changelog for Meraki switches)

- It is recommended to enable UDLD in Alert-only mode on point to point links

- It is recommended to enable UDLD in Enforce mode on multi-point ports (e.g two or more UDLD-capable ports are connected through one or more switches that don't support UDLD)

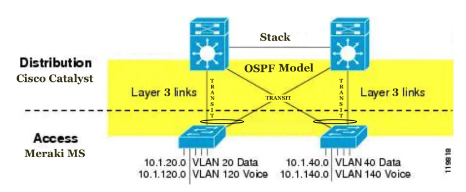

- If using Layer 3 routing, plan your OSPF areas and routing flow from one area to the other (OSPF timers, interfaces, VLANs, etc)

- If enabling Adaptive Policy, choose the assignment method (Static vs Dynamic via Radius) and SGT per access port and whether trunk port peers are SGT capable or not

- Do you need to tag Radius and other traffic in a separate VLAN other than the management VLAN? (Refer to Alternate Management Interface)

- Check your MTU considerations taking into account all additional headers (e.g. AnyConnect, other VPNs, etc)

- Switch ACL requirements (e.g IPv4 ACLs, IPv6 ACLs, Group policy ACLs, etc). Click here for more information about Switch ACL operation

- Switch security requirements (e.g DHCP snooping behavior, Alerts, DAI, etc)

- For energy-saving purposes, consider using Port schedules
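For the PoE budget item in the planning list above, a rough check is to add up the expected draw of the powered devices on a switch and compare it with the switch's PoE budget. The sketch below is illustrative only: the function name is hypothetical, the wattages and the budget in the usage note are made-up figures, and the optional headroom parameter is an assumption. Take real values from the device and switch datasheets, and remember to check the required power standard(s) as well as the total budget.

```python
def poe_budget_check(port_draws_watts, switch_budget_watts, headroom=0.0):
    """Return (required_watts, fits) for a single switch.

    `port_draws_watts` lists the expected draw of each powered device.
    `headroom` is the fraction of the budget to keep in reserve
    (an illustrative assumption, 0.0 means use the full budget).
    """
    required = sum(port_draws_watts)
    usable = switch_budget_watts * (1 - headroom)
    return required, required <= usable
```

For example, eight hypothetical 30 W access points plus sixteen 7 W phones require 352 W, which fits a hypothetical 370 W budget but not if 20% of that budget is held in reserve.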

Installation, Deployment & Maintenance

General Guidance

- Have your Campus LAN design finalized in terms of L2 and L3 nodes as well as the SVIs required where applicable (Please refer to the below sections for guidance on the design elements)

- Start with the network edge and have your firewalls and routers (e.g. Meraki MX SD-WAN & Security Appliance) connected to the public internet and able to access the Meraki cloud (Check firewall rules requirements for cloud connectivity)

- Connect the aggregation switches with uplinks and get them online on dashboard so they can download available firmware and configuration files (Refer to the installation guide for your Meraki aggregation switches)

- Configure stacking for your aggregation switches and connect stacking cables to bring the stack online (Please follow stacking best practices)

- Enable OSPF where applicable and choose what interfaces should be advertised (Please refer to routing best practices)

- Connect access switches with uplinks and get them online on dashboard so they can download available firmware and configuration files (Refer to the installation guide of your Meraki access switches)

- Configure stacking for your access switches and connect stacking cables to bring the stack online (Please follow stacking best practices)

- Ensure that your security settings (e.g. Switch ACL) have been completed

- Connect access points with uplinks to your access switches and get them online on dashboard so they can download available firmware and configuration files (Refer to the installation guide for your Meraki access point)

- Ensure that your switch QoS settings match incoming DSCP values from your APs

- Check your administration settings and adjust dashboard access as required (e.g. Tag based port access)

- Complete other settings in dashboard as required (e.g. Traffic analytics)

- Revisit your dashboard after 7 days to monitor activity and configure tweaks based on actual traffic profiles (e.g. Traffic Shaping on MR APs and switch QoS) and also monitor security events (e.g. DHCP snooping)

- Remember that Campus LAN design is like any other design process and should run in iterations for continuous enhancements and development

- For any Client VLAN changes, start from where your SVI resides (assuming it's within the Campus LAN)

- For any native VLAN changes, start from the lowest layer (e.g. Access Layer) and work your way upwards. This prevents losing access to downstream devices, which might otherwise require a factory reset

- For any management VLAN changes, attempt to change your IP address settings to DHCP first allowing the switch to acquire an IP address in the designated VLAN automatically. When back online in Dashboard with the new IP address, change the settings to Static assigning the required IP address

- Any SVI or routing changes should be done in a maintenance window as it will result in a brief outage in traffic forwarding

- Always pay attention to platform specific requirements/restrictions. Please refer to the following sections below for further guidance

Redundancy & Resiliency

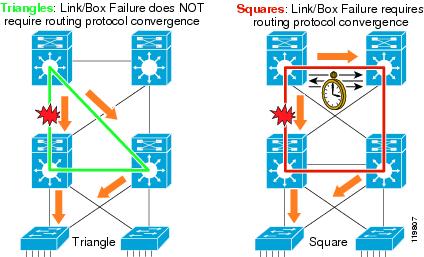

For optimum distribution-to-core layer convergence, build redundant triangles, not squares, to take advantage of equal-cost redundant paths for the best deterministic convergence. See the below figure for an illustration:

Redundant Triangles

The multilayer switches are connected redundantly with a triangle of links that have Layer 3 equal costs. Because the links have equal costs, they appear in the routing table (and by default will be used for load balancing). If one of the links or distribution layer devices fails, convergence is extremely fast, because the failure is detected in hardware and there is no need for the routing protocol to recalculate a new path; it just continues to use one of the paths already in its routing table.

Redundant Squares

In contrast, with redundant squares only one path is active by default, and a link or device failure requires the routing protocol to recalculate a new route in order to converge.

General Guidance:

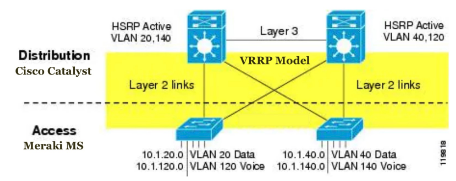

- Consider default gateway redundancy (where applicable) using dual connections to redundant distribution layer switches that use VRRP/HSRP/GLBP such that it provides fast failover from one switch to the other at the distribution layer

- Use Link Aggregation (EtherChannel or 802.3ad) between switches and/or switch stacks, which provides higher effective bandwidth while reducing complexity and improving service availability

- Deploy redundant triangles as opposed to redundant squares

- Deploy redundant distribution layer switches (preferably stacked together)

- Deploy redundant point-to-point L3 interconnections in the core

- High availability in the distribution layer should be provided through dual equal-cost paths from the distribution layer to the core and from the access layer to the distribution layer. This results in fast, deterministic convergence in the event of a link or node failure

- Redundant power supplies to enhance the overall service availability


The following Meraki MS platforms support Power Supply resiliency:

- MS250

- MS350

- MS355

- MS390

- MS420

- MS425

Meraki MS390 switches support StackPower in addition to Power resiliency and are in combined power mode by default

Firmware

General Guidance

- It’s always important to consider the topology of your switches because, as you move closer to the network core and away from the access layer, the risk during a firmware upgrade increases

- For Large Campus LAN, it is recommended to start the upgrade closest to the access layer

- For Core switches, it is recommended to reschedule the upgrade to your desired maintenance window

- Staged Upgrades allows you to upgrade in logical increments (For instance, starting from low-risk locations at the access layer and moving onto the higher risk core)

- Firmware for MS switches is set on the network level and therefore all switches in that network will have the same firmware

- Major releases; A new major firmware is released with the launch of new products, technologies and/or major features. New major firmware may also include additional performance, security and/or stability enhancements

- Minor releases; A new minor firmware version is released to fix any bugs or security vulnerabilities encountered during the lifecycle of a major firmware release

- On average, Meraki deploys a new firmware version once a quarter for each product family

- Please plan for sufficient bandwidth to be available for firmware downloads as they can be large in size

- It is recommended to set the out-of-hours preferred upgrade date and time in your network settings for automatic upgrades (remember to set the network's timezone)

- You can also manually upgrade network firmware from Organization > Firmware Upgrades (Meraki will notify you 2 weeks in advance of the scheduled upgrade and, within this two week time window, you have the ability to reschedule to a day and time of your choice)

- Meraki MS devices use a “safe configuration” mechanism, which allows them to revert to the last good (“safe”) configuration in the event that a configuration change causes the device to go offline or reboot.

- During routine operation, if a device remains functional for a certain amount of time (30 minutes in most circumstances, or 2 hours on the MS after a firmware upgrade), a configuration is deemed safe

- When a device comes online for the first time or immediately after a factory reset, a new safe configuration file is generated since one doesn’t exist previously

- It is recommended to leave the device online for 2 hours for the configuration to be marked safe after the first boot or a factory reset.

Multiple reboots in quick succession during initial bootup may result in a loss of this configuration and failure to come online. In such events, a factory reset will be required to recover

General Recommendation

When upgrading Meraki switches it is important that you allocate enough time in your upgrade window for each group or phase to ensure a smooth transition. Each upgrade cycle needs enough time to download the new version to the switches, perform the upgrade, allow the network to reconverge around protocols such as spanning tree and OSPF that may be configured in your network, and some extra time to potentially roll back if any issue is uncovered after the upgrade.

The Meraki firmware release cycle consists of three stages: beta, release candidate (RC) and stable firmware. This cycle is covered in more detail in the Meraki Firmware Development Lifecycle section.

Please note that beta firmware is fully supported by Meraki Technical Support and can be considered an Early Field Deployment release. If you have any issues with the new beta firmware, you can always roll back to the previous stable version, or to the previously installed version if you roll back within 14 days.

The high-level process for a switch upgrade involves the following:

- The switch downloads the new firmware (time varies depending on your connection)

- The switch starts a countdown of 20 minutes to allow any other switches downstream to finish their download

- The switch reboots with its new firmware (about a minute)

- Network protocols re-converge (varies depending on configuration)
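
As a rough planning aid, the steps above can be summed to estimate how long an upgrade window should be. The following is a minimal sketch, not a Meraki tool: the class and function names are hypothetical, and the download, re-convergence and rollback figures are placeholder assumptions you would replace with values measured in your own network. Only the 20-minute countdown and the roughly one-minute reboot come from the steps above.

```python
from dataclasses import dataclass

COUNTDOWN_MIN = 20  # countdown that lets downstream switches finish downloading
REBOOT_MIN = 1      # approximate reboot time with the new firmware


@dataclass
class StageEstimate:
    download_min: float               # assumption: estimated for your uplink
    reconverge_min: float             # assumption: depends on STP/OSPF design
    rollback_buffer_min: float = 0.0  # assumption: time reserved for a rollback

    def total_minutes(self) -> float:
        return (self.download_min + COUNTDOWN_MIN + REBOOT_MIN
                + self.reconverge_min + self.rollback_buffer_min)


def window_minutes(stages: list[StageEstimate]) -> float:
    """Groups are upgraded one after another, so the window is the sum."""
    return sum(s.total_minutes() for s in stages)


if __name__ == "__main__":
    stages = [
        StageEstimate(download_min=10, reconverge_min=5, rollback_buffer_min=15),
        StageEstimate(download_min=10, reconverge_min=10, rollback_buffer_min=15),
    ]
    print(window_minutes(stages))  # 51 + 56 = 107
```

Treat the result as a lower bound for the window rather than a guarantee, since download and re-convergence times vary.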

In the dashboard, each firmware version has a Status column that shows one of the following:

- Good (Green) status indicates that your network is set to the latest firmware release. Minor updates may be available, but no immediate action is required.

- Warning (Yellow) status means that a newer stable major firmware or newer minor beta firmware is available that may contain security fixes, new features, and performance improvements. We recommend that you upgrade to the latest stable or beta firmware version.

- Critical (Red) status indicates that the firmware for your network is out of date and may have security vulnerabilities and/or experience suboptimal performance. We highly recommend that you upgrade to the latest stable or latest beta firmware release.

For more information about Firmware Upgrades, please refer to the following FAQ document.

MS390 Specific Guidance

- Meraki continues to develop software capabilities for the MS390 platform, therefore it is important to refer to the firmware changelog before setting a firmware for your network which includes MS390 switches.

- Please ensure that the firmware selected includes support for MS390 build.

- Also pay attention to the new features section as well as the known issues related to this firmware.

Staged Upgrades Guidance

- To make managing complex switched networks simpler, Meraki supports automatic staged firmware updates

- This allows you to easily designate groups of switches into different upgrade stages

- When you are scheduling your upgrades you can easily mark multiple stages of upgrades (e.g. Stage1, Stage2 and Stage3)

- Each stage has to complete its upgrade process before proceeding to the next stage

- All members of a switch stack must be upgraded at the same time, within the same upgrade window.

- You cannot select an individual switch stack member to be upgraded; only the entire switch stack can be selected

- Switch stack upgrade behavior: each stack member reboots at close to the same time, and the stack then automatically re-forms as the members come online

This feature is currently not supported when using templates

Firmware Upgrade Barriers

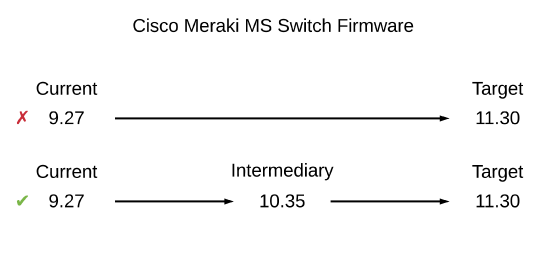

- Firmware upgrade barriers are a built-in feature that prevents devices running older firmware versions from upgrading directly to a build that would otherwise cause compatibility issues.

- Having devices use intermediary builds defined by Meraki will ensure a safe transition when upgrading your devices.

Here is an example of when firmware upgrade barriers come into effect. You might find yourself unable to upgrade a device for an extended period of time due to uptime or business requirements. Suppose a switch in the network is running MS 9.27 and you would like to upgrade it to the latest stable version, which at the time of writing is 11.30. A direct upgrade from 9.27 to 11.30 will not be a selectable option in the dashboard, and administrators will have to upgrade to 10.35 first.

To complete the upgrade from the current version to the target version, two manual upgrades are required: the first from your current version to the intermediary version, and the second from the intermediary version to your target version.
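
The two-step path in this example can be sketched in a few lines. This is illustrative only: the dashboard enforces barriers itself, and the `BARRIERS` table and function names below are hypothetical. The single entry (9.x must pass through 10.35) simply mirrors the example above and is not a complete list of Meraki barrier builds.

```python
Version = tuple[int, int]

# Hypothetical barrier table: major version of the running firmware mapped to
# the intermediary build it must install first. Only this entry is taken from
# the example in the text.
BARRIERS: dict[int, Version] = {9: (10, 35)}


def parse(version: str) -> Version:
    """Turn '9.27' into (9, 27) so versions compare numerically."""
    major, minor = version.split(".")
    return int(major), int(minor)


def upgrade_path(current: str, target: str) -> list[str]:
    """Return the ordered list of versions to install to reach the target."""
    cur, tgt = parse(current), parse(target)
    path = []
    while cur < tgt:
        step = BARRIERS.get(cur[0])
        if step is not None and cur < step < tgt:
            cur = step   # a barrier applies: install the intermediary build
        else:
            cur = tgt    # no barrier in the way: go straight to the target
        path.append(f"{cur[0]}.{cur[1]}")
    return path


if __name__ == "__main__":
    print(upgrade_path("9.27", "11.30"))   # ['10.35', '11.30']
    print(upgrade_path("10.35", "11.30"))  # ['11.30']
```

Each entry in the returned list corresponds to one manual upgrade, so each one needs its own upgrade window.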

Meraki Switches per Dashboard Network

General Guidance

- It is recommended to keep the total number of Meraki switches (Access and Distribution combined) in a dashboard network at 400 or fewer for best dashboard performance.

- If the switch count exceeds 400, the network topology and switch ports pages are likely to load slowly or display inconsistent output.

- It is recommended to keep the total switch port count in a network to fewer than 8000 ports for reliable loading of the switch port page

There is no hard limit on the number of switches in a network, so dashboard will not prevent you from exceeding these recommendations. Please take them into consideration when planning the whole Campus LAN.
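
During planning, the two recommendations above can be checked against a proposed design with a short script. This is a minimal sketch: the function name and input format (one port count per switch) are assumptions made for illustration, and the thresholds are the recommended values from this section rather than enforced limits.

```python
MAX_SWITCHES = 400  # recommended maximum switches per dashboard network
MAX_PORTS = 8000    # recommended: fewer than 8000 switch ports per network


def sizing_warnings(port_counts: list[int]) -> list[str]:
    """port_counts holds one entry per planned switch (its number of ports)."""
    warnings = []
    if len(port_counts) > MAX_SWITCHES:
        warnings.append(
            f"{len(port_counts)} switches exceeds the recommended {MAX_SWITCHES}")
    total_ports = sum(port_counts)
    if total_ports >= MAX_PORTS:
        warnings.append(
            f"{total_ports} ports is not below the recommended {MAX_PORTS}")
    return warnings


if __name__ == "__main__":
    print(sizing_warnings([48] * 150))  # 7200 ports: no warnings
    print(sizing_warnings([48] * 170))  # 8160 ports: one warning
```

If a design triggers a warning, splitting the switches across more than one dashboard network is one way to stay within the recommendations.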

Cabling

General Guidance

- It is recommended to use Category-5e cables for switch ports up to 1Gbps

- While Category-5e cables can support multigigabit data rates of up to 2.5/5 Gbps, external factors such as noise and alien crosstalk, coupled with longer cable or cable bundle lengths, can impede reliable link operation.

- Noise can originate from cable bundling, RFI, cable movement, lightning, power surges and other transient events.

- It is recommended to use Category-6a cabling for reliable multigigabit operations as it mitigates alien crosstalk by design

- Please ensure that you are using Approved Meraki SFPs and Accessories per hardware model

Meraki will only support the Approved Meraki SFPs and Accessories for use with MS and MX platforms. A number of Cisco converters have also been certified for use with Meraki MS switches:

- SFP-H10GB-CU1M

- SFP-H10GB-CU3M

- SFP-10G-SR-S

- SFP-10G-SR

Power Over Ethernet (PoE)

General Guidance

- MS platforms allocate power based on the actual drawn power from the client device

MS390 Specific Guidance

- MS390s allocate power based on the requested power from the client device (as opposed to the actual drawn power).

- It is recommended to calculate your power budget based on the maximum power mentioned on the client device data sheet (e.g. MR56 consumes 30W).

- The requested power is based on the power class advertised using Layer 2 discovery protocols (e.g. LLDP, CDP). Refer to the following table for more information on the power class and the corresponding power values:

| Class | Maximum Power Level |
|---|---|
| 0 (unknown class) | 15.4 W |
| 1 | 4 W |
| 2 | 7 W |
| 3 | 15.4 W |
| 4 | 30 W |
| 5 | 45 W |
| 6 | 60 W |
| 7 | 75 W |
| 8 | 90 W |
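
Because the MS390 allocates the requested power rather than the drawn power, a power budget can be estimated by summing the class maximums from the table above. The sketch below assumes you know the class each client advertises. The function names and the 740 W switch budget are hypothetical, so check the data sheet of your switch model for its actual PoE budget.

```python
# Maximum power per PoE class in watts, copied from the table above.
CLASS_MAX_WATTS = {
    0: 15.4, 1: 4.0, 2: 7.0, 3: 15.4, 4: 30.0,
    5: 45.0, 6: 60.0, 7: 75.0, 8: 90.0,
}


def requested_budget_watts(device_classes: list[int]) -> float:
    """Sum the class maximums, as a request-based allocation would."""
    return sum(CLASS_MAX_WATTS[c] for c in device_classes)


def fits_budget(device_classes: list[int], switch_budget_watts: float) -> bool:
    return requested_budget_watts(device_classes) <= switch_budget_watts


if __name__ == "__main__":
    aps = [4] * 24  # e.g. 24 access points advertising class 4 (30 W each)
    print(requested_budget_watts(aps))  # 720.0
    print(fits_budget(aps, 740.0))      # 740 W is a hypothetical switch budget
```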

IP Addressing and VLANs

General Guidance

- By default, all switchports on Meraki MS platforms are configured in Trunk mode with Native VLAN 1, and the Management VLAN is VLAN 1

- Even if it is undesirable to use Native VLAN 1 in production, it is recommended to use it for provisioning the switches for ZTP purposes. Once the switches/stacks are online in dashboard and running in a steady state, you can then change the Management VLAN as required. Remember to change port settings downstream first to avoid losing access to switches.

- Assign a dedicated management VLAN for your switches which has access to the Internet (More info here)

- Avoid overlapping subnets as this may lead to inconsistent routing and forwarding

- Dedicate /24 or /23 subnets for end-user access

- Do not configure a L3 interface for the management VLAN. Use L3 interfaces only for data VLANs. This helps in separating management traffic from end-user data

All MS platforms (excluding MS390) use a separate routing table for management traffic. Configuring a Management IP within the range of a configured SVI interface can lead to undesired behavior. The Management VLAN must be separate from any configured SVI interface.
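
A planned management IP can be checked against the configured SVI subnets before it is applied. The sketch below uses only Python's standard `ipaddress` module. The function name and the example addresses are illustrative assumptions, and the SVI list would come from your own design documentation.

```python
import ipaddress


def overlapping_svis(management_ip: str, svi_subnets: list[str]) -> list[str]:
    """Return the SVI subnets that contain the management IP (ideally none)."""
    ip = ipaddress.ip_address(management_ip)
    # strict=False also accepts interface-style input such as 10.0.10.1/24
    return [s for s in svi_subnets
            if ip in ipaddress.ip_network(s, strict=False)]


if __name__ == "__main__":
    svis = ["10.0.10.0/24", "10.0.20.0/24"]  # example data VLAN SVIs
    print(overlapping_svis("10.0.10.5", svis))  # ['10.0.10.0/24']
    print(overlapping_svis("10.0.99.5", svis))  # []
```

An empty result means the management IP sits outside every SVI subnet, which matches the guidance above.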

- VLANs that are not required should be manually pruned from trunked interfaces to avoid broadcast propagation.

- If you require your RADIUS, Syslog or SNMP traffic to be carried in a separate VLAN (one that is not necessarily exposed to the internet), consider using the Alternate Management Interface on MS. Please refer to the table below for feature compatibility:

| MS Switch Family | MS Switch Model | MS Firmware Support (first supported on) |
|---|---|---|
| MS2xx | MS210 | MS14.5 |
| MS2xx | MS225 | MS14.5 |
| MS2xx | MS250 | MS14.5 |
| MS3xx | MS350 | MS14.5 |
| MS3xx | MS355 | MS14.5 |
| MS3xx | MS390 | MS15 |
| MS4xx | MS410 | MS14.5 |
| MS4xx | MS425 | MS14.5 |
| MS4xx | MS450 | MS14.5 |

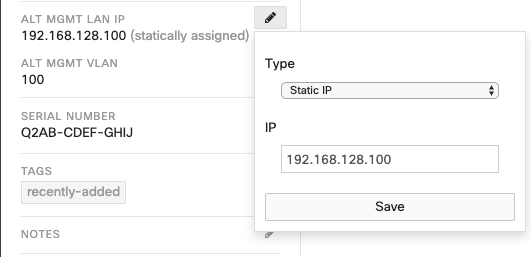

The Alternate Management Interface (AMI) functionality is enabled at a per-network level and, therefore, all switches within the Dashboard Network will use the same VLAN for the AMI. The AMI IP address can be configured per switch statically as shown below:

Please note that the subnet of the AMI (the subnet mask for the AMI IP address) is derived from the Layer-3 interface for the AMI VLAN, if one has been configured on the switch. In the absence of a Layer-3 interface for the AMI VLAN, each switch will treat its AMI as a /32 network address.

Layer 3 routing must be enabled on a switch for its AMI to be activated.

MS390 Specific Guidance

- The default active VLANs on any MS390 port are 1-1000. This can be changed via the local status page or in dashboard (see note below)

- Please ensure that the MS390 switch/stack has a maximum of 1000 VLANs

- The total number of VLANs supported on ANY MS390 switch port is 1000

For example, if you have an existing stack with each port set to native VLAN 1 and allowed VLANs 1-1000, and the new member's ports are set to native VLAN 1 and allowed VLANs 1,2001-2500, then the total number of VLANs in the stack will be 1000 (1-1000) + 500 (2001-2500) = 1500. Dashboard will not allow the new member to be added to the stack and will show an error.

To utilize any VLANs outside of 1-1000 on an MS390, the switch or switch stack must have ALL of its trunk interfaces set to an allowed VLAN list that contains a total of 1000 VLANs or fewer, including any module interfaces that are not in use. Here's a quick way to do that.
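
The VLAN arithmetic in the example above can be reproduced with a small helper that counts the distinct VLANs across all allowed-VLAN lists in a stack. This is a sketch under an assumption: it treats the stack total as the union of every port's allowed list, which matches the 1500 figure in the example. The function names are hypothetical and this is not how dashboard itself is implemented.

```python
MAX_MS390_VLANS = 1000  # maximum VLANs per MS390 switch or stack


def parse_vlans(allowed: str) -> set[int]:
    """Expand a list such as '1,2001-2500' into a set of VLAN IDs."""
    vlans: set[int] = set()
    for part in allowed.split(","):
        part = part.strip()
        if "-" in part:
            low, high = part.split("-")
            vlans.update(range(int(low), int(high) + 1))
        elif part:
            vlans.add(int(part))
    return vlans


def stack_vlan_count(port_allowed_lists: list[str]) -> int:
    """Count distinct VLANs across every port's allowed-VLAN list."""
    total: set[int] = set()
    for allowed in port_allowed_lists:
        total |= parse_vlans(allowed)
    return len(total)


if __name__ == "__main__":
    count = stack_vlan_count(["1-1000", "1,2001-2500"])
    print(count)                     # 1500
    print(count <= MAX_MS390_VLANS)  # False: the new member would be rejected
```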

MS390 Stacking Specific Guidance

- Please refer to the MS390 Stacking guidance provided below

DHCP

General Guidance

- DHCP is recommended for faster deployments and zero-touch provisioning

- It is recommended to use fixed DHCP assignments on the DHCP server, as this ensures that other network applications (e.g. RADIUS) always see the same source IP address range (i.e. the Management/AMI VLAN)

- Static IP addressing can also be used; however, to minimize initial provisioning effort, it is recommended to use DHCP for initial setup and then change the IP addressing from dashboard. Meraki MS switches will attempt DHCP discovery on all supported VLANs.

Please refer to the stacking section for further guidance on IP addressing when using switch stacks

MS390 Specific Guidance

- When installing an MS390, it is important to ensure that any DHCP services or IP address assignments used for management fall within the active VLAN range (1-1000 by default, unless changed via the local status page or dashboard)

- If you require Static IP addressing (or an IP address in a VLAN outside of the default active VLANs 1-1000), please connect each MS390 switch with an uplink to the Meraki dashboard and upgrade its firmware to the latest stable version prior to changing any configuration. Once the switch upgrades and reboots, you can then change the management IP as required (please ensure the upstream switch/device allows this VLAN in its port configuration).

MS390 Stacks Specific Guidance